The Intelligent Cabin Management System (ICMS), a solution for the aviation and rail industries, has been advancing continuously as new technologies and capabilities emerge.

Thanks to ever-improving facial recognition algorithms and their implementations, artificial intelligence (AI) has vastly improved efficiency and reliability in domains such as user authentication, behavior analysis, safety, protection, threat detection, and object tracking. In addition, monitoring passengers' vital signs onboard is gaining importance.

At Cyient Limited, we have developed an Intelligent Cabin Management Solution (ICMS) capable of carrying out cabin operations with a focus on passenger safety, security, and health. While this application is suitable for all kinds of passenger cabins in aircraft and rail, it is especially suited to Urban Air Mobility (UAM) applications, where four- to six-passenger aircraft are expected to graduate incrementally from initial single-pilot-operated platforms to completely autonomous ones, gradually replacing the human element inside passenger cabins.

Cyient’s ICMS can perform the following tasks during flight operations:

To meet these objectives, the ICMS application has been designed to integrate software modules for face detection, face identification, gesture recognition, object detection, identification, and tracking, and radar-based vital sign detection.

How it works

A passenger's credentials are captured as soon as a journey by aircraft, train, or UAM is booked, when the passenger provides the appropriate identification documents and photographs. These credentials are stored in the customer database and are used to identify the passenger when they report for boarding. At boarding, the stored credentials are matched against facial captures from onboard cameras to authenticate the passenger's entry into the cabin. The application then maps all occupied seats against the seat allocations made during reservation and raises cautionary flags if wrong seating is detected.

The application also detects objects carried by passengers and raises prohibitory alarms to discourage passengers from carrying banned items into the cabin. It identifies items that are permitted but could be used aggressively to harm fellow passengers, categorizes them for continuous tracking during the journey, and raises cautionary flags if such objects are taken out during the flight. Finally, it continuously monitors passengers' facial gestures and raises timely cautionary flags to the pilot or operations control when a gesture is classified as offensive.
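
The seat-mapping check described above can be sketched in a few lines of Python. This is a minimal illustration, not Cyient's implementation: the `SeatObservation` record, the `check_seating` function, and the flag wording are hypothetical, and the sketch assumes face identification has already resolved each seated person to a passenger ID.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SeatObservation:
    """Hypothetical record: which passenger was detected in which seat."""
    passenger_id: str  # identity resolved by onboard face identification
    seat: str          # seat in which that passenger was detected


def check_seating(allocations: dict, observations: list) -> list:
    """Compare detected seating with reservation-time seat allocations.

    allocations maps passenger_id -> allocated seat.
    Returns cautionary flags as strings; an empty list means no mismatch.
    """
    flags = []
    for obs in observations:
        allocated = allocations.get(obs.passenger_id)
        if allocated is None:
            flags.append(f"{obs.passenger_id}: no seat allocation on record")
        elif allocated != obs.seat:
            flags.append(
                f"{obs.passenger_id}: seated in {obs.seat}, allocated {allocated}"
            )
    return flags


allocations = {"P1": "1A", "P2": "1B"}
seen = [SeatObservation("P1", "1A"), SeatObservation("P2", "2A")]
print(check_seating(allocations, seen))  # ['P2: seated in 2A, allocated 1B']
```

Returning plain strings keeps the sketch short; a real system would more likely emit structured events that the pilot or operations-control display can filter and acknowledge.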

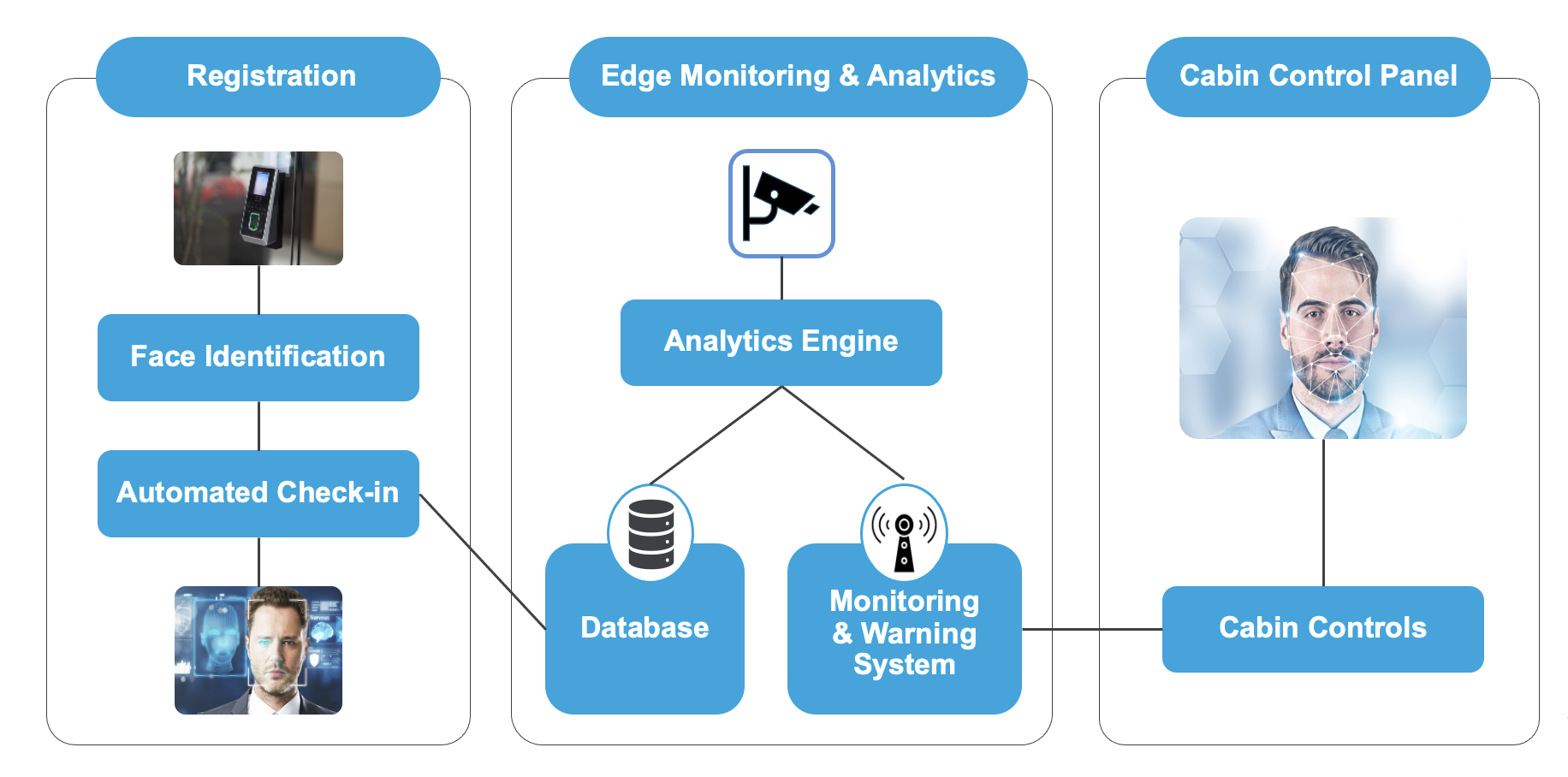

Figure 1: ICMS architecture

The solution is implemented through the following sequence of processes:

This involves one-to-one matching of an individual's captured image against the reference images that the customer provided during booking and that are stored in the library.
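
One-to-one matching is commonly done by comparing face embeddings (numeric feature vectors produced by a face recognition model) with a similarity score and a threshold. The sketch below assumes that approach; the document does not name the model or metric ICMS uses, so the embedding source, the `verify` function, and the 0.6 threshold are illustrative assumptions only.

```python
import numpy as np


def verify(probe, references, threshold=0.6):
    """One-to-one verification of a boarding capture against one passenger.

    probe: embedding of the face captured by the onboard camera.
    references: embeddings of the reference images the same passenger
    supplied at booking (one per row of a 2-D array).
    Returns True if the best cosine similarity reaches the threshold.
    """
    p = np.asarray(probe, dtype=float)
    refs = np.atleast_2d(np.asarray(references, dtype=float))
    sims = refs @ p / (np.linalg.norm(refs, axis=1) * np.linalg.norm(p))
    return bool(sims.max() >= threshold)


reference = [[1.0, 0.0, 0.0]]
print(verify([0.9, 0.1, 0.0], reference))  # True
print(verify([0.0, 1.0, 0.0], reference))  # False
```

Taking the maximum over several reference images tolerates one poor-quality booking photo; the threshold trades false accepts against false rejects and would have to be tuned on validation data.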

This involves labeling faces in a video/image.
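
The labeling step can be illustrated with a short Python sketch. The document does not say which face detector ICMS uses, so this assumes a generic detector that returns candidate bounding boxes with confidence scores. Greedy non-maximum suppression (NMS), a standard post-processing step for such detectors, merges overlapping candidates before each surviving face receives a label. The function names, the `face_n` label format, and both thresholds are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def label_faces(boxes, scores, min_score=0.5, iou_threshold=0.5):
    """Label detected faces after greedy non-maximum suppression.

    Candidates above min_score are visited in descending score order; a
    candidate is kept only if it overlaps no already-kept box by more than
    iou_threshold. Kept boxes are labeled face_0, face_1, ...
    """
    order = sorted(
        (i for i in range(len(boxes)) if scores[i] >= min_score),
        key=lambda i: scores[i],
        reverse=True,
    )
    kept = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in kept):
            kept.append(i)
    return {f"face_{n}": boxes[i] for n, i in enumerate(kept)}


boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
print(label_faces(boxes, scores))
# {'face_0': (0, 0, 10, 10), 'face_1': (50, 50, 60, 60)}
```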

At this stage, noise and other irrelevant content are removed from the captured image so that the algorithm can match it against the multiple photographs in the database and recognize the individual.
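
This stage combines two steps: cleaning the capture and then searching the whole database (one-to-many matching, as opposed to the one-to-one check at boarding). The sketch below is a hedged illustration. The document does not name its denoising method, so a 3x3 median filter, a standard remedy for isolated noisy pixels, stands in as an assumption. `identify` assumes face embeddings from an unspecified recognition model, and its threshold is a placeholder.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def denoise(image):
    """3x3 median filter: suppresses isolated 'salt and pepper' pixels."""
    padded = np.pad(np.asarray(image, dtype=float), 1, mode="edge")
    windows = sliding_window_view(padded, (3, 3))  # shape (H, W, 3, 3)
    return np.median(windows, axis=(-2, -1))


def identify(probe, gallery_ids, gallery, threshold=0.6):
    """One-to-many search of a probe embedding against the database.

    Returns the ID of the most similar gallery embedding by cosine
    similarity, or None if even the best match is below the threshold.
    """
    g = np.asarray(gallery, dtype=float)
    p = np.asarray(probe, dtype=float)
    sims = (g @ p) / (np.linalg.norm(g, axis=1) * np.linalg.norm(p))
    best = int(np.argmax(sims))
    return gallery_ids[best] if sims[best] >= threshold else None


noisy = np.zeros((5, 5))
noisy[2, 2] = 255.0                      # a single noise spike
print(denoise(noisy).max())              # 0.0
print(identify([0.1, 0.9], ["A", "B"], [[1, 0], [0, 1]]))  # B
```

Returning None instead of the closest match matters for one-to-many search: a person absent from the database should be reported as unknown rather than mislabeled as the nearest passenger.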

At this stage, an individual's face capture is processed to determine whether they are wearing a face mask. This stage applies when COVID-appropriate behavior is to be monitored inside the passenger cabin.
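
A per-frame mask classifier will occasionally misread a single frame, for example when a passenger turns their head. One common way to avoid spurious flags is to vote over a short window of recent frames. The sketch below assumes a hypothetical upstream classifier that outputs P(mask) per frame; the `MaskMonitor` class, window length, and thresholds are illustrative, not the ICMS design.

```python
from collections import deque


class MaskMonitor:
    """Smooth per-frame mask predictions for one passenger.

    A no-mask flag is raised only when at least vote_fraction of the most
    recent `window` frames were classified as 'no mask'.
    """

    def __init__(self, window=15, prob_threshold=0.5, vote_fraction=0.7):
        self.history = deque(maxlen=window)
        self.prob_threshold = prob_threshold
        self.vote_fraction = vote_fraction

    def update(self, p_mask):
        """Add one frame's P(mask); return True if a flag should be raised."""
        self.history.append(p_mask < self.prob_threshold)
        if len(self.history) < self.history.maxlen:
            return False  # not enough evidence yet
        return sum(self.history) / len(self.history) >= self.vote_fraction


monitor = MaskMonitor(window=5, vote_fraction=0.5)
print([monitor.update(p) for p in [0.9, 0.9, 0.1, 0.1, 0.1]])
# [False, False, False, False, True]
```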

In this stage, each passenger is scanned on entry into the cabin to detect all objects being carried in. The system identifies objects that are permitted but fall into the likely-offensive category, continuously tracks their location, and raises cautionary flags if they are lifted during the flight.
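
The three outcomes described for object screening (prohibitory alarm, tracking, and a flag when a tracked item is lifted) map onto simple rules once an object detector and tracker supply labels and positions. In this minimal sketch the category lists, the 0.3 m movement threshold, and the function names are hypothetical; the actual ICMS classification is not published.

```python
import math

# Hypothetical category lists, for illustration only.
BANNED = {"knife", "firearm"}
LIKELY_OFFENSIVE = {"umbrella", "glass_bottle"}  # permitted, but tracked


def screen_on_entry(labels):
    """Split detector labels into prohibitory alarms and items to track."""
    alarms = [label for label in labels if label in BANNED]
    to_track = [label for label in labels if label in LIKELY_OFFENSIVE]
    return alarms, to_track


def is_lifted(stowed_xyz, current_xyz, max_shift_m=0.3):
    """Flag a tracked item that has moved more than max_shift_m metres
    from its stowed position (positions from an assumed object tracker)."""
    return math.dist(stowed_xyz, current_xyz) > max_shift_m


alarms, to_track = screen_on_entry(["knife", "umbrella", "book"])
print(alarms, to_track)                              # ['knife'] ['umbrella']
print(is_lifted((0.0, 0.0, 0.0), (0.0, 0.0, 0.5)))   # True
```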

Here, an individual's facial expressions are processed to determine whether their gestures are aggressive.
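
To raise "timely" flags without reacting to every fleeting expression, per-frame scores are usually smoothed over time. The sketch below assumes a hypothetical expression classifier that outputs, per frame, the probability that a face looks aggressive. An exponential moving average (EMA) damps noise, and a flag fires once the smoothed score stays above a threshold for several consecutive frames. All parameter values are illustrative.

```python
def aggression_flags(scores, alpha=0.3, threshold=0.7, min_frames=3):
    """Return frame indices at which a cautionary flag is raised.

    scores: per-frame probability of an aggressive expression.
    A flag fires when the EMA has exceeded `threshold` for `min_frames`
    consecutive frames; it re-arms after the EMA drops back below.
    """
    flags, ema, run = [], None, 0
    for i, s in enumerate(scores):
        ema = s if ema is None else alpha * s + (1 - alpha) * ema
        run = run + 1 if ema > threshold else 0
        if run == min_frames:
            flags.append(i)
    return flags


print(aggression_flags([0.9] * 5))                  # [2]
print(aggression_flags([0.1, 0.1, 1.0, 0.1, 0.1]))  # [] (one-frame spike ignored)
```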

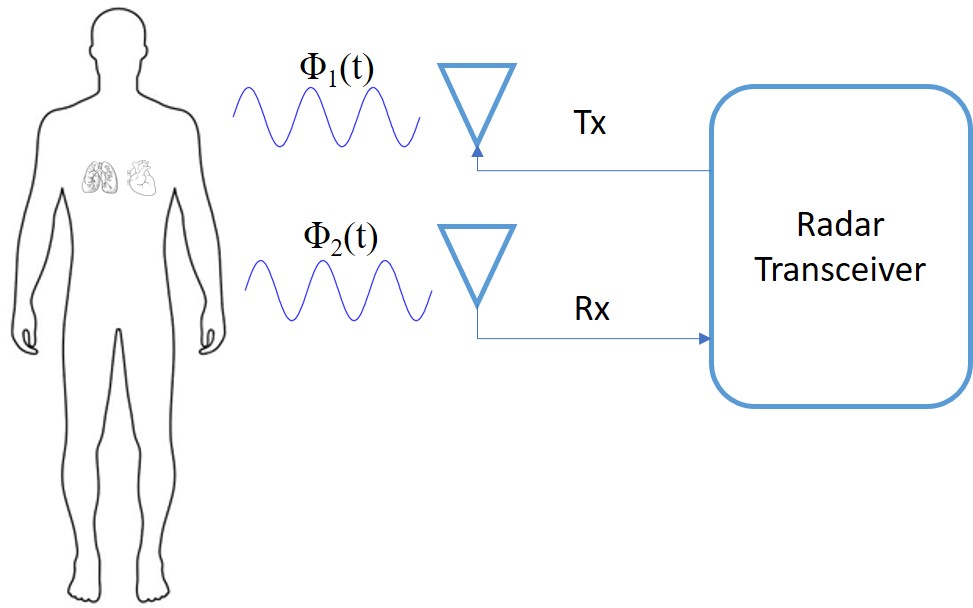

Radar-based vital sign detection is a contactless process that maintains the complete privacy of individuals. Radar sensors are used to detect passengers' heart rate and breathing rate.
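
Breathing and heartbeat both move the chest periodically, so once the radar front end has converted its returns into a chest-displacement time series (a step not shown here and assumed to exist), each rate can be estimated as the strongest spectral peak in a physiologically plausible frequency band. This FFT peak-picking sketch is one common approach, not necessarily the one ICMS uses; the band limits and function names are assumptions.

```python
import numpy as np


def peak_rate_per_min(x, fs, f_lo, f_hi):
    """Strongest spectral component of x within [f_lo, f_hi] Hz, per minute."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                      # drop the static (DC) chest offset
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x))))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    band = (freqs >= f_lo) & (freqs <= f_hi)
    return float(freqs[band][np.argmax(spectrum[band])] * 60.0)


def vital_signs(displacement, fs):
    """Estimate (breaths/min, beats/min) from a chest-displacement trace.

    Assumed bands: 0.1-0.5 Hz for breathing (6-30 /min) and
    0.8-2.0 Hz for heart rate (48-120 /min).
    """
    breathing = peak_rate_per_min(displacement, fs, 0.1, 0.5)
    heart = peak_rate_per_min(displacement, fs, 0.8, 2.0)
    return breathing, heart


# Synthetic 60 s trace sampled at 20 Hz: a large 0.25 Hz breathing motion
# plus a much smaller 1.2 Hz heartbeat component.
fs = 20.0
t = np.arange(1200) / fs
trace = 4.0 * np.sin(2 * np.pi * 0.25 * t) + 0.5 * np.sin(2 * np.pi * 1.2 * t)
print(vital_signs(trace, fs))  # roughly (15.0, 72.0)
```

The window length sets the frequency resolution (here 1/60 Hz, or 1 per minute), which is why a reasonably long observation window is needed for usable rate estimates.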

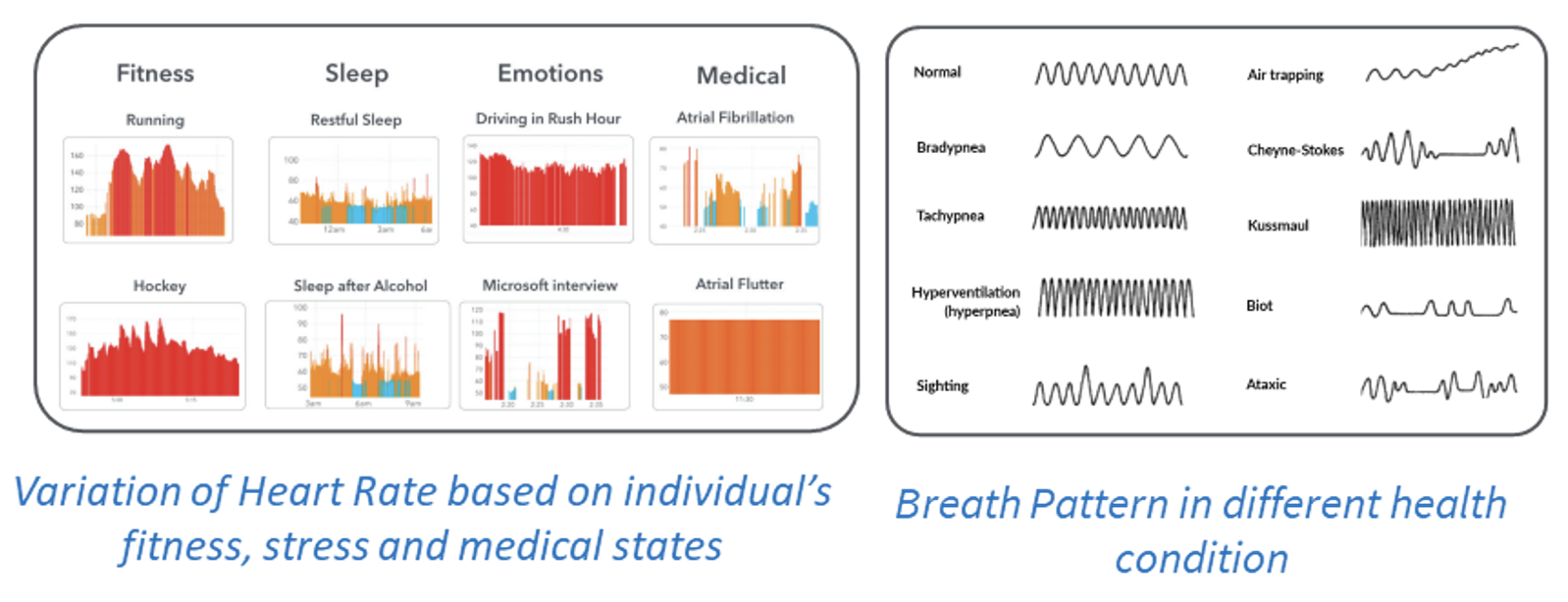

Application software can then carry out further analysis of a passenger's health condition based on heart rate and breathing rate.
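
The simplest form of such analysis is a range check on the estimated rates. The sketch below uses the commonly cited resting ranges for healthy adults (60 to 100 beats per minute, 12 to 20 breaths per minute) purely as illustrative defaults; a deployed system would need clinically validated thresholds, and the `assess` function is hypothetical.

```python
# Commonly cited resting ranges for healthy adults; illustrative only.
HEART_RATE_RANGE = (60.0, 100.0)     # beats per minute
BREATHING_RATE_RANGE = (12.0, 20.0)  # breaths per minute


def assess(heart_rate, breathing_rate):
    """Return alert strings for readings outside the nominal ranges."""
    alerts = []
    for name, value, (low, high) in (
        ("heart rate", heart_rate, HEART_RATE_RANGE),
        ("breathing rate", breathing_rate, BREATHING_RATE_RANGE),
    ):
        if value < low:
            alerts.append(f"low {name}: {value:.0f}/min")
        elif value > high:
            alerts.append(f"high {name}: {value:.0f}/min")
    return alerts


print(assess(72, 15))   # []
print(assess(120, 26))  # ['high heart rate: 120/min', 'high breathing rate: 26/min']
```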

Figures 2 and 3 illustrate the concept of radar-based health monitoring.

Figure 2: Heart rate monitoring using radar

Figure 3: Typical variation of heart rate and breathing pattern based on different health conditions

Face detection systems are becoming increasingly vital for implementing security measures in all walks of life. The challenge lies in accurately detecting faces in a captured image: even minor variations in conditions, such as ambient light, occlusions, position or posture, backdrop, and aging, can severely degrade detection and cause faces in a captured shot to be missed entirely.

A reliable and accurate face recognition system should be able to detect and identify a face irrespective of environmental conditions and ambience. The challenge lies not only in accurate identification but also in recognizing facial gestures and categorizing them appropriately. As the range of applications expands, the system's complexity increases manifold, making accurate detection harder still.

Factors that affect accurate detection are of two broad types:

Developing a highly reliable face recognition system requires incorporating the latest technological developments, with special attention to every minute detail captured by monitoring systems. Because real-world data is limited, expensive, and time-consuming to collect, obtaining the right data to curate and label, and to train, test, and validate such systems, is even more challenging.
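
A common way to stretch scarce real-world data is augmentation: generating synthetic variants of existing face crops that mimic the variations listed earlier (pose, illumination, occlusion). The sketch below is an illustration under that assumption, not Cyient's data pipeline; the `augment` function and the specific transforms and ranges are hypothetical choices.

```python
import numpy as np


def augment(face, rng):
    """Return simple synthetic variants of a grayscale face crop (uint8, HxW)."""
    img = face.astype(np.float32)
    variants = [
        img[:, ::-1],                                  # mirror: pose variation
        np.clip(img * rng.uniform(0.5, 1.5), 0, 255),  # illumination change
    ]
    occluded = img.copy()
    h, w = img.shape
    y, x = rng.integers(0, h // 2), rng.integers(0, w // 2)
    occluded[y:y + h // 2, x:x + w // 2] = 0           # occlusion patch
    variants.append(occluded)
    return [v.astype(np.uint8) for v in variants]


face = np.full((8, 8), 100, dtype=np.uint8)
out = augment(face, np.random.default_rng(0))
print(len(out), [v.shape for v in out])  # 3 [(8, 8), (8, 8), (8, 8)]
```

Augmentation does not replace real data, since synthetic variants cannot cover conditions absent from the originals, but it can make a model less sensitive to the specific variations it simulates.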

Another challenge is maintaining the accuracy of heart rate and breathing rate measurements when other moving parts are present, as is likely in a complex cabin scenario. A detailed model for training the algorithms is essential for greater accuracy, and hence for making the radar data useful.
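
One simple mitigation, offered here as an assumption rather than the ICMS method, is to discard analysis windows dominated by large movements before estimating vital signs. Breathing and heartbeat produce small chest displacements, whereas a passenger shifting in their seat or a nearby moving object produces much larger ones. The `clean_windows` function and its thresholds (including the displacement units) are hypothetical.

```python
import numpy as np


def clean_windows(displacement, fs, window_s=10.0, max_std=2.0):
    """Split a displacement trace into fixed windows and keep only those
    whose standard deviation is at most max_std (assumed units, e.g. mm).
    Windows with larger spread are treated as motion artifacts and dropped."""
    x = np.asarray(displacement, dtype=float)
    n = int(window_s * fs)
    windows = [x[i:i + n] for i in range(0, len(x) - n + 1, n)]
    return [w for w in windows if w.std() <= max_std]


fs = 10.0
t = np.arange(100) / fs
quiet = 0.5 * np.sin(2 * np.pi * 0.25 * t)   # breathing-sized motion
moving = 10.0 * np.sin(2 * np.pi * 0.25 * t)  # large body movement
trace = np.concatenate([quiet, moving, quiet])
print(len(clean_windows(trace, fs)))  # 2
```

Variance gating only rejects gross motion; separating a passenger from other moving targets at different distances would typically rely on the radar's range or angle resolution as well.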

Facial recognition systems are in great demand and require a high level of accuracy and reliability. Because facial images are often captured in their natural environment, backgrounds can be complex, with drastic variations in illumination. These systems therefore require careful mitigation of challenges due to aging, occlusions, degree of illumination, variation in resolution, expression, and pose. In addition, with increasingly complex lifestyles, temporal changes in health parameters are critical and need to be monitored onboard so that autonomous decisions can be taken on UAM emergency landing or rerouting to the nearest healthcare facility.

All these challenges can be addressed through appropriate technology and algorithms, opening up opportunities for future innovation.

Ajay Kumar Lohany is an aeronautical engineer with specialization in avionics systems. He holds a master’s degree in computer science and modeling and simulation. He has served in the Indian Air Force as a flight test and instrumentation engineer. With over 32 years of industry experience, he takes a keen interest in building technological solutions that help solve problems in the aerospace and rail domains.

Ranadeep Saha is an Electronics & Communication Engineer specializing in microwave and radar technologies and systems. He has over 20 years of industry experience in microwave and radar system research, design, and development. He has primarily worked on the design and development of radars and other microwave systems for detection, sensing, and tracking, progressing from design engineer to function lead and then to head of radar R&D. He has extensive experience applying microwave and radar technology in the defence, space, government, and commercial markets.

Cyient (Estd: 1991, NSE: CYIENT) is a consulting-led, industry-centric, global Technology Solutions company. We enable our customers to apply technology imaginatively across their value chain to solve problems that matter. We are committed to designing tomorrow together with our stakeholders and being a culturally inclusive, socially responsible, and environmentally sustainable organization.

For more information, please visit www.cyient.com

Cyient (Estd: 1991, NSE: CYIENT) delivers Intelligent Engineering solutions for a Digital, Autonomous and Sustainable Future

© Cyient 2024. All Rights Reserved.