

Distributed Computing Transforming Operations in Utilities

Distributed computing can unlock new levels of scalability, reliability and savings in the utility industry


Abstract

This white paper explores the application of distributed computing in the utility industry, demonstrating how this innovative computational technique can enhance efficiency, reliability, scalability, and cost-effectiveness. Through a detailed examination of the principles, advantages, disadvantages, examples, and use cases, we aim to provide insights into the potential benefits and challenges of implementing distributed computing in the utility sector.

Key Objectives

Efficiency enhancement

We scrutinize how distributed computing orchestrates multiple interconnected nodes to perform tasks in parallel, unlocking unprecedented processing power. By distributing workloads across a network of computers, the utilities industry can achieve remarkable computational speeds, significantly reducing processing times for complex tasks.

Reliability and fault tolerance

We investigate the fault-tolerant nature of distributed computing systems, emphasizing their resilience to node failures. In the utilities sector, where uninterrupted operations are paramount, understanding how distributed computing mitigates risks and ensures continued functionality becomes pivotal.

Scalability in utilities operations

We explore how distributed computing lets utilities scale their operations by adding nodes to the network, accommodating the industry's ever-growing demands.

Cost-effectiveness

By leveraging off-the-shelf hardware and compatible open-source software or pay-as-you-go cloud platforms, distributed computing models promise to deliver enhanced capabilities at a fraction of the cost compared to traditional monolithic systems.


Introduction

The utility industry stands at the intersection of escalating demand, technological evolution, and the imperative for operational optimization. In this dynamic landscape, where torrents of data flow incessantly and the need for precision in processing and analysis has never been more critical, the application of cutting-edge computational methodologies becomes not just advantageous but essential. Among these methodologies, distributed computing is emerging as a potent force, capable of reshaping the very fabric of utility operations.

The utility sector operates in an environment characterized by vast datasets, diverse operational facets, and a relentless demand for precision and efficiency. Traditional computing models, often strained by the sheer scale and intricacy of utility operations, fall short in meeting these demands. Recognizing these challenges, the industry is increasingly turning to distributed computing as a strategic lever to unlock new levels of efficiency, reliability, scalability, and cost-effectiveness.

Distributed computing, at its core, epitomizes the synergy achieved when multiple interconnected computer systems unite to tackle intricate challenges. This collaborative approach allows for the dissection of complex problems into manageable components, distributed across a network of nodes. As the utility industry grapples with the enormity of tasks ranging from grid management to resource optimization, the divide-and-conquer philosophy of distributed computing presents a compelling solution.

This white paper undertakes a comprehensive exploration of distributed computing within the context of the utility industry. Our primary objective is to unravel the transformative potential embedded in the principles and applications of distributed computing, elucidating how this computational paradigm aligns with and augments the multifaceted landscape of utility operations.

We delve into fundamental concepts ranging from the basic tenets of task segmentation and collaborative problem-solving to the intricacies of communication protocols and the diversity of architectural models to guide readers through the complexities of distributed computing.

We also examine the potential impact of distributed computing on utilities, and how it is poised to revolutionize the industry, offering not just incremental improvements but a paradigm shift in the way utilities process, analyze, and manage their vast datasets and operational workflows.

From addressing the industry-specific challenges to presenting tangible scenarios where distributed computing becomes a game-changer, this white paper aims to provide a holistic perspective on the integration of distributed computing into the utility industry, and equip industry stakeholders to navigate the evolving landscape of utility operations in an era defined by data abundance and computational innovation.

Distributed Computing Overview

What is Distributed Computing?

In the vast landscape of computational methodologies, distributed computing emerges as a beacon of innovation, embodying collaboration and synergy to address challenges that transcend the capacity of a solitary computing entity. At its core, distributed computing orchestrates the collective efforts of multiple interconnected nodes, strategically dividing formidable tasks into more manageable subtasks. This collaborative approach is key to handling tasks of colossal magnitude or intricacy, a typical characteristic of the utility industry where the need for efficient processing and analysis of substantial datasets is critical.

Whether optimizing energy distribution across grids, managing real-time data analytics for predictive maintenance, or enhancing the reliability of the overall system, the collaborative nature of distributed computing becomes an indispensable asset for utilities. This section examines the transformative impact of distributed computing in utilities, illustrating its applicability to tasks that are inherently too large or complex for a single computing entity.

How does Distributed Computing Work?

Distributed computing operates on a foundation of strategic collaboration and parallelism, where the whole exceeds the sum of its parts. This section delves into the intricate workings and principles that enable interconnected nodes to function as a cohesive unit.

Role of interconnected nodes

Central to distributed computing is the concept of interconnected nodes. These nodes, spanning diverse computing devices such as servers, smartphones, and IoT devices, act as individual entities within a network. Their collaboration is orchestrated to collectively solve complex problems, leveraging their individual processing power. Understanding the pivotal role of interconnected nodes is fundamental to comprehending the dynamics of distributed computing.

Task segmentation

Task segmentation is a strategic cornerstone in the distributed computing framework. This involves the meticulous breakdown of large tasks into smaller, more manageable subtasks. Each node within the network is assigned a specific subtask based on its programmed responsibilities. This division of labor facilitates parallel processing, allowing nodes to concurrently address their designated portions, ultimately contributing to the comprehensive solution of the overarching task.
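The segmentation step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the function names and the meter-reading workload are hypothetical, and local threads stand in for the networked nodes a real deployment would use.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_subtasks(readings, num_nodes):
    """Split a list of readings into num_nodes nearly equal contiguous chunks."""
    chunk_size, remainder = divmod(len(readings), num_nodes)
    chunks, start = [], 0
    for i in range(num_nodes):
        # The first `remainder` chunks take one extra item each.
        end = start + chunk_size + (1 if i < remainder else 0)
        chunks.append(readings[start:end])
        start = end
    return chunks


def node_subtask(chunk):
    """Work done by one (hypothetical) node: total consumption of its chunk."""
    return sum(chunk)


def total_consumption(readings, num_nodes=4):
    """Segment the task, run the subtasks concurrently, then combine results."""
    chunks = split_into_subtasks(readings, num_nodes)
    with ThreadPoolExecutor(max_workers=num_nodes) as pool:
        partial_sums = list(pool.map(node_subtask, chunks))
    return sum(partial_sums)
```

Calling total_consumption(readings, num_nodes=4) yields the same total as a single sum over all readings. The point of the sketch is the shape of the computation: split, process the parts independently, combine.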

Communications protocols

Efficient communication is the lifeblood of distributed computing. Nodes must share information, synchronize activities, and collectively contribute to the overall task. The discussion encompasses the nuances of communications protocols that enable nodes to exchange messages, ensuring coherence and alignment in their collaborative efforts.
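As a rough illustration of message exchange between nodes, the sketch below passes JSON-encoded messages between a coordinator and one worker. The message fields ("sender", "kind", "payload") and the node names are assumptions made for this example, and in-memory queues stand in for a real network transport.

```python
import json
import queue
import threading


def encode_message(sender, kind, payload):
    """Serialize a message to a JSON string (hypothetical envelope format)."""
    return json.dumps({"sender": sender, "kind": kind, "payload": payload})


def decode_message(raw):
    """Parse a JSON string back into a message dictionary."""
    return json.loads(raw)


def worker(node_id, inbox, outbox):
    """Process 'task' messages until a 'stop' message arrives."""
    while True:
        msg = decode_message(inbox.get())
        if msg["kind"] == "stop":
            break
        # The task here is simply summing the numbers in the payload.
        outbox.put(encode_message(node_id, "result", sum(msg["payload"])))


def run_exchange(tasks):
    """Send each task to a single worker and collect the replies in order."""
    inbox, outbox = queue.Queue(), queue.Queue()
    thread = threading.Thread(target=worker, args=("node-1", inbox, outbox))
    thread.start()
    for task in tasks:
        inbox.put(encode_message("coordinator", "task", task))
    inbox.put(encode_message("coordinator", "stop", None))
    thread.join()
    return [decode_message(outbox.get())["payload"] for _ in tasks]
```

Both sides agree on the message format in advance, which is the essential role a communications protocol plays; real systems add addressing, retries, and ordering guarantees on top.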

Architectural models

Distributed systems manifest in various architectural models, each tailored to specific requirements. Here we look at four prominent models, and their unique characteristics:

Client-server model

Divides tasks between clients and servers, where clients initiate requests and servers fulfill them.

Three-tier model

Organized into presentation, application, and data storage tiers, this model distributes specific functions across the system.

N-tier model

A more intricate model involving multiple layers, each assigned specific functions, which offers finer separation of concerns at the cost of increased system complexity.

Peer-to-peer model

A decentralized architecture where each node shares equal responsibility, representing a collaborative approach without centralized control.
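The contrast between the client-server and peer-to-peer models can be sketched with a few small classes. This is an in-process illustration only: the class names, the meter-reading data, and the direct method calls (standing in for network requests) are all assumptions of the example.

```python
class Server:
    """Client-server: one node owns the data and answers requests."""

    def __init__(self, meter_readings):
        self._readings = meter_readings

    def handle(self, meter_id):
        return self._readings.get(meter_id)


class Client:
    """A client only initiates requests; it holds no shared data."""

    def __init__(self, server):
        self._server = server

    def read_meter(self, meter_id):
        return self._server.handle(meter_id)


class Peer:
    """Peer-to-peer: every node both serves its own data and queries others."""

    def __init__(self, name, meter_readings):
        self.name = name
        self._readings = meter_readings
        self.neighbors = []

    def handle(self, meter_id):
        return self._readings.get(meter_id)

    def read_meter(self, meter_id):
        # Answer locally if possible, otherwise ask each neighbor in turn.
        local = self.handle(meter_id)
        if local is not None:
            return local
        for peer in self.neighbors:
            value = peer.handle(meter_id)
            if value is not None:
                return value
        return None
```

In the first pair, losing the Server makes every request fail; in the second, each Peer can still answer for the data it holds. The three-tier and N-tier models can be read as the client-server pattern with further layers inserted between client and data.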

Advantages of Distributed Computing in Utilities

In the utility industry, where data flows ceaselessly, operations are multifaceted, and precision is paramount, the adoption of distributed computing offers advantages in efficiency, reliability, scalability, and cost-effectiveness. This section examines each of these advantages in turn, with an example of how it applies to utility operations.

Efficiency

Computational speed and reduced latency

Distributed computing operates as a catalyst for computational acceleration within utilities. By harnessing the parallel processing capabilities of interconnected nodes, large-scale tasks are broken down into smaller subtasks, allowing multiple nodes to work concurrently. This collaborative approach translates into a substantial enhancement in computational speed, enabling utilities to process vast datasets and execute complex algorithms with unprecedented efficiency.
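How much speed a utility gains from adding nodes depends on how much of the task can actually run in parallel. Amdahl's law gives a standard upper bound on that speedup; the sketch below computes it. The function and parameter names are chosen for this example.

```python
def amdahl_speedup(parallel_fraction, num_nodes):
    """Upper bound on speedup when a fraction of the work runs on num_nodes.

    parallel_fraction: share of the task that can be parallelized (0.0 to 1.0).
    num_nodes: number of nodes working on the parallel part (at least 1).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if num_nodes < 1:
        raise ValueError("num_nodes must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / num_nodes)
```

For instance, if 90% of a load-flow analysis can be parallelized, ten nodes give a speedup of roughly 5.3 times rather than 10 times, because the remaining serial 10% is not shortened by adding nodes.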

Reduced latency is another notable efficiency gain. When tasks are distributed to nodes located close to the data source, information has less of the network to traverse before it is processed. This reduction in latency is particularly vital in real-time applications, such as grid management and demand forecasting, where timely responses are critical.

Increased bandwidth and optimized resource utilization

Distributed computing optimizes bandwidth utilization by distributing the data processing load across the network. This approach mitigates the strain on individual nodes and ensures a more balanced and efficient use of available resources. Through this optimized resource utilization, utilities can extract maximum performance from their existing infrastructure, achieving operational efficiency without the need for extensive hardware upgrades.

Example: In a utilities scenario, distributed computing can enhance the efficiency of demand response systems. By breaking down the intricate task of analyzing real-time energy consumption data into subtasks distributed among nodes, the system can respond swiftly to fluctuations in demand, optimizing energy distribution and resource allocation in real-time.

Reliability

Fault-tolerant frameworks

The utility sector demands unwavering reliability to ensure uninterrupted service delivery. Distributed computing systems can be designed to be fault-tolerant, withstanding disruptions caused by individual node failures. In such an environment, the failure of one node does not jeopardize the entire system. Instead, the workload is rerouted to functioning nodes, ensuring continuous operations and minimizing the impact of potential failures.

Example: Consider a utility's grid management system utilizing distributed computing. If a node responsible for monitoring a particular section of the grid experiences a failure, the system seamlessly redistributes the monitoring task to other nodes. This fault-tolerant approach guarantees that grid operations remain robust and unaffected by isolated failures.
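The rerouting behavior in this example can be sketched as follows. This is a simplified illustration: the node and section names are hypothetical, failure detection is assumed to happen elsewhere, and the "least-loaded survivor" policy is one of several reasonable choices.

```python
def assign_sections(sections, nodes):
    """Assign grid sections to node names in round-robin order."""
    assignment = {node: [] for node in nodes}
    for i, section in enumerate(sections):
        assignment[nodes[i % len(nodes)]].append(section)
    return assignment


def reroute_on_failure(assignment, failed_node):
    """Return a new assignment with the failed node's sections moved to survivors."""
    survivors = [node for node in assignment if node != failed_node]
    if not survivors:
        raise RuntimeError("no healthy nodes left to take over the workload")
    new_assignment = {node: list(assignment[node]) for node in survivors}
    for section in assignment.get(failed_node, []):
        # Hand each orphaned section to the currently least-loaded survivor.
        target = min(survivors, key=lambda node: len(new_assignment[node]))
        new_assignment[target].append(section)
    return new_assignment
```

After reroute_on_failure runs, every section is still monitored by some healthy node, which is the property the example relies on. The sketch also makes the limit visible: if no healthy node remains, the workload cannot be rerouted.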

Scalability

Seamless handling of increasing workloads

The utility industry experiences fluctuations in workloads, especially as demands for energy, data processing, and real-time analytics evolve. Distributed computing systems exhibit remarkable scalability, offering a straightforward solution to handle increasing workloads. The addition of nodes to the existing network allows utilities to effortlessly scale their computational capabilities in response to growing demands, ensuring a flexible and adaptive infrastructure.

Example: In a utilities scenario, such as energy distribution during extreme weather conditions, the computational demands on the system can spike. Distributed computing allows for seamless scalability by incorporating additional nodes during peak periods. This ensures that the system can efficiently handle the increased workload, guaranteeing continuous and reliable energy distribution.
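A simple way to reason about scaling during such a peak is to estimate how many nodes a given load requires. The sketch below is a back-of-the-envelope calculation under assumed inputs: the per-node capacity and the 20% headroom default are illustrative values, not measurements.

```python
import math


def nodes_required(load_per_second, capacity_per_node, headroom=0.2):
    """Estimate the node count needed to serve a load with spare capacity.

    load_per_second: incoming work, e.g. sensor messages per second.
    capacity_per_node: work one node can process per second.
    headroom: fraction of each node's capacity kept in reserve (0 to <1).
    """
    if capacity_per_node <= 0:
        raise ValueError("capacity_per_node must be positive")
    if not 0.0 <= headroom < 1.0:
        raise ValueError("headroom must be in the range [0, 1)")
    usable_capacity = capacity_per_node * (1.0 - headroom)
    # Always keep at least one node running, even with no load.
    return max(1, math.ceil(load_per_second / usable_capacity))
```

Comparing the result for normal load with the result for storm-level load gives the number of nodes to add for the peak period and to release afterwards.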

Low Cost

Compatibility and utilization of cost-effective infrastructure

Distributed computing infrastructure is designed to be cost-effective. It capitalizes on off-the-shelf, commodity hardware, making it compatible with a wide array of devices already in circulation within utility operations. Moreover, the utilization of open-source software or pay-as-you-go cloud platforms boosts the cost-effectiveness of distributed computing models, delivering enhanced capabilities without exorbitant investments.

Example: A utility company implementing distributed computing for predictive maintenance can leverage cost-effective commodity servers. By utilizing open-source predictive analytics software, the company can achieve a high level of sophistication in monitoring equipment health without the need for proprietary and costly solutions.

In essence, the advantages of distributed computing in the utility industry extend beyond mere operational enhancements. They represent a strategic evolution toward a more resilient, adaptable, and cost-conscious utility infrastructure, poised to meet the ever-changing demands of a dynamic industry landscape.

Examples of Distributed Computing in Utilities

As operational complexities of the utility industry grow, distributed computing emerges as a linchpin, seamlessly weaving through diverse facets to optimize efficiency, enhance reliability, and cater to the ever-growing demands of data processing. This section presents examples and use cases where distributed computing plays a transformative role in utility operations.

Networks

Local area networks (LANs) and internet-based systems

Distributed computing forms the backbone of local area networks and Internet-based systems within the utility sector. It facilitates seamless data exchange, remote collaboration, and resource sharing. Whether coordinating grid management tasks or ensuring real-time communication between utility entities, distributed computing empowers the industry to operate cohesively and respond dynamically to evolving demands.

Example: In a utilities company, distributed computing is employed across local area networks and internet-based systems to facilitate collaborative efforts among geographically dispersed teams. Engineers, analysts, and operators can collectively contribute to real-time monitoring and decision-making processes, ensuring the efficient management of utility resources.

Telecommunications Networks

Transmission of data, voice, and video

Telecommunications networks rely heavily on distributed computing to ensure the swift and efficient transmission of data, voice, and video over vast distances. By distributing the processing load across interconnected nodes, distributed computing enhances the reliability and speed of telecommunications infrastructure within the utility sector. This ensures seamless communication between utilities, enabling swift response to contingencies and efficient coordination of operations.

Example: Distributed computing in telecommunications networks allows utilities to transmit real-time data from remote sensors and monitoring devices. This is crucial for maintaining situational awareness, optimizing grid operations, and promptly addressing any issues that may arise.

Real-Time Systems

Prompt logical computations

Real-time systems in utilities demand prompt and accurate computations for tasks such as energy demand forecasting, fault detection, and response to grid fluctuations. Distributed computing plays a pivotal role in ensuring the timeliness of these computations by distributing the workload across interconnected nodes. This enhances the responsiveness of real-time systems, providing utilities with the agility needed to make timely decisions.

Example: In a utilities scenario, a real-time energy management system employs distributed computing to analyze data from smart meters and sensors across the grid. This enables the system to make instantaneous decisions on energy distribution, ensuring a balanced and optimized grid operation in response to changing demand patterns.

Parallel Processors

Complex computational tasks

Parallel processing, a form of distributed computing, finds application in utilities for handling complex computational tasks. By dividing these tasks into smaller subtasks processed concurrently by multiple nodes, parallel processors significantly reduce the run time. This is particularly valuable for utilities engaged in simulations, optimization, and large-scale data analysis.

Example: A utilities company may utilize parallel processors to simulate and optimize the performance of its energy distribution network. By breaking down the complex computations into parallel tasks, the system can quickly assess various scenarios and make informed decisions to enhance the efficiency of the grid.

Distributed Database Systems

Managing data across multiple interconnected databases

Distributed database systems leverage distributed computing to manage data across multiple interconnected databases in the utilities sector. This approach enhances data availability, scalability, and fault tolerance. By distributing data processing across nodes, utilities ensure consistent and reliable access to critical information, facilitating streamlined operations.

Example: In utility operations, a distributed database system may be employed to manage and analyze data from various sources, such as customer information, energy consumption patterns, and equipment health. This ensures that decision-makers have access to a unified and up-to-date view of essential data for informed decision-making.
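One common way to spread records across databases is hash-based sharding with replication, sketched below. This is an illustrative scheme rather than a description of any particular database product: the key format and the "next shard holds the replica" rule are assumptions of the example.

```python
import hashlib


def shard_for(key, num_shards):
    """Map a record key (e.g. a meter ID) to a shard index deterministically."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def replicas_for(key, num_shards, replication_factor=2):
    """Return the shards holding copies of a key: the primary plus its successors."""
    primary = shard_for(key, num_shards)
    copies = min(replication_factor, num_shards)
    return [(primary + i) % num_shards for i in range(copies)]
```

Because every node computes the same shard for the same key, any node can locate a record without a central directory, and storing each record on more than one shard keeps it available if one database fails. A drawback of plain modulo sharding is that changing the shard count relocates most keys; consistent hashing is a frequently used refinement.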

Distributed Artificial Intelligence

Processing large-scale datasets for advanced decision-making

Distributed artificial intelligence (DAI) is a subfield where multiple AI-powered autonomous agents collaborate to extract insights and predictive analytics from large-scale datasets. In utilities, DAI enhances decision-making by efficiently processing vast amounts of data. This collaborative approach not only expedites analytics but also enhances the interpretability of AI models.

Example: In utilities, DAI can be applied to process data from smart grids, weather patterns, and historical energy consumption. By leveraging the collective intelligence of distributed agents, utilities can gain deeper insights into grid behavior, optimize energy distribution, and make data-driven decisions for improved overall efficiency.
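As a toy illustration of agents combining their findings, the sketch below has each agent summarize only its own data, after which a coordinator merges the summaries. The functions and the choice of a sample-weighted mean are assumptions made for this example; real DAI systems exchange far richer models.

```python
def local_estimate(readings):
    """One agent's summary of its own data: (mean, sample count)."""
    return (sum(readings) / len(readings), len(readings))


def combine_estimates(estimates):
    """Merge agents' summaries into a sample-weighted overall mean."""
    total_count = sum(count for _, count in estimates)
    weighted_sum = sum(mean * count for mean, count in estimates)
    return weighted_sum / total_count
```

The combined value equals the mean that would be computed over all readings together, yet no agent has to ship its raw data to a central location; only the small summaries travel over the network.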

These diverse examples and use cases show that distributed computing is not just a theoretical concept but a practical and transformative force within the utilities industry. By seamlessly integrating into various operational aspects, distributed computing empowers utilities to navigate challenges, embrace opportunities, and operate in a dynamic and data-driven environment.

The Cyient Thought Board

What advantages does distributed computing offer to the utility industry?

Efficiency

- Computational acceleration through parallel processing

- Reduced latency for timely responses

- Optimized resource utilization for efficiency

Reliability

- Fault-tolerant frameworks to ensure uninterrupted service

- Example: Grid management remains robust despite node failures

Scalability

- Handles fluctuating workloads seamlessly

- Example: Incorporates nodes for peak demand periods

Low Cost

- Compatible with existing infrastructure

- Utilization of open-source or pay-as-you-go platforms

- Example: Predictive maintenance with cost-effective servers

Challenges and Avenues for Further Research

While the advantages of distributed computing in utilities are evident, acknowledging challenges is integral to further innovation. The complexity inherent in distributed systems demands meticulous attention to communications protocols, maintenance procedures, and security measures. As utilities embrace this transformative computational paradigm, challenges and avenues for further research and development need to be addressed.

Complexity management

As distributed systems grow in complexity, effective management becomes paramount. Future research can delve into simplifying the deployment and maintenance of distributed computing systems, mitigating the risk of human error and system vulnerabilities.

Security enhancement

The expansive nature of distributed systems increases the attack surface, necessitating robust security measures. Ongoing research can focus on enhancing the security protocols within distributed computing, safeguarding utilities against potential cyber threats.
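One basic building block for securing traffic between nodes is message authentication. The sketch below uses Python's standard hmac module to sign and verify messages with a shared secret. It is a minimal illustration: the message layout is hypothetical, and key distribution, encryption, and replay protection are out of scope.

```python
import hashlib
import hmac
import json


def sign_message(payload, secret_key):
    """Serialize a payload and attach an HMAC-SHA256 tag computed with secret_key."""
    body = json.dumps(payload, sort_keys=True)
    tag = hmac.new(secret_key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(message, secret_key):
    """Return the payload if the tag is valid, otherwise None."""
    expected = hmac.new(
        secret_key, message["body"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    # compare_digest avoids leaking information through comparison timing.
    if not hmac.compare_digest(expected, message["tag"]):
        return None
    return json.loads(message["body"])
```

A node that receives a message whose body was altered in transit, or that was signed with a different key, gets None from verify_message and can discard it.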

Conclusion

The potential benefits of implementing distributed computing in the utilities industry are far-reaching. From streamlining real-time operations to enhancing the reliability of critical communication networks, distributed computing positions itself as a transformative force capable of meeting the evolving needs of this dynamic sector. The utility of distributed computing extends beyond theoretical advantages, manifesting in tangible improvements across various operational domains. As utilities strive for more resilient, adaptive, and data-driven operations, the integration of distributed computing becomes not just advantageous but imperative. The collaborative nature of distributed systems aligns seamlessly with the collaborative and interconnected world of utility operations, promising a future where computational resources are harnessed optimally to meet the demands of a changing landscape.

About the Author

Vijay is a decision analyst and digital transformation and sustainability reporting practitioner at Cyient. He helps customers realize business value with his expertise in designing business processes to improve customer experience, increase profitability, and provide a competitive edge. He has extensive experience leading circularity and implementing digital readiness on sustainable business models, product and service portfolios, market and customer access, value chains and processes, IT architecture, compliance, organization, and culture.

About Cyient

Cyient (Estd: 1991, NSE: CYIENT) partners with over 300 customers, including 40% of the top 100 global innovators, to deliver intelligent engineering and technology solutions for creating a digital, autonomous, and sustainable future. As a company, Cyient is committed to designing a culturally inclusive, socially responsible, and environmentally sustainable Tomorrow Together with our stakeholders.

For more information, please visit www.cyient.com

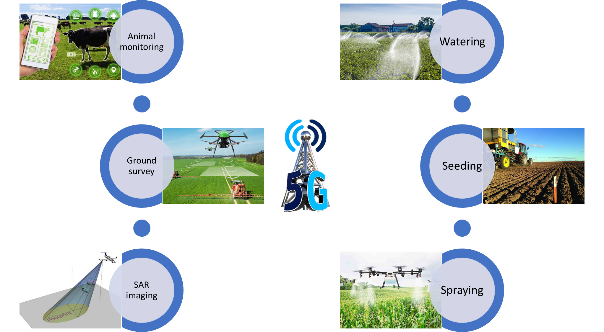

5G technology in agriculture

The advent of 5G technology will revolutionize global farming landscapes and open up multiple ways to establish and grow precision farming. The figure below shows that every element in modern agriculture, once connected to a high-speed, high-throughput 5G cellular network, works in tandem with the others to optimize resources and maximize yield. The imagery generated from SAR and GPR demands high throughput for transfer to a distant, central location or data cloud. Similarly, controlling farming equipment remotely requires a low-latency communications network.

Figure 10. Uses of 5G technology in agriculture

Future of Hyperautomation

Hyperautomation will continue to evolve and redefine industries. Here are a few trends that could shape its future:

Hyperautomation as-a-service

Cloud-based hyperautomation platforms will become more accessible, allowing organizations of all sizes to leverage automation as a service. This democratization of technology will drive innovation across sectors.

Human-automation collaboration

Rather than replacing humans entirely, hyperautomation will focus on enhancing human capabilities.

Industry-specific solutions

Hyperautomation will be tailored to meet the specific needs of different industries. We can expect specialized solutions in sectors like healthcare, manufacturing, telecom, energy, and utilities addressing industry-specific challenges and requirements.

Enhanced cognitive capabilities

Advances in AI, ML, and Gen AI will lead to even more sophisticated cognitive capabilities, enabling systems to handle complex decision-making and problem-solving tasks.

IoT integration

IoT will become more tightly integrated with hyperautomation. Sensors and data from connected devices will be used to optimize and automate processes in real time.

Cross-industry collaboration

Industries will increasingly collaborate and share best practices for hyperautomation implementation. This cross-pollination of ideas will accelerate innovation and adoption.

Regulatory frameworks

Governments and regulatory bodies will establish frameworks to address the ethical and legal implications of hyperautomation, ensuring a responsible and fair use of the technology.

In the future, we can expect to see even more changes in the way hyperautomation is used and implemented. Advances in IoT, blockchain, and quantum computing will open opportunities for hyperautomation to be applied in new domains and enable it to automate highly complex tasks and processes.

About the Author

Pankaj Sahu spearheads the enterprise asset management (EAM) practice for electric, gas, and water utility technology solutions at Cyient. With a wealth of experience spanning over 20 years, he has an established track record in implementing and consulting EAM and APM solutions for clients in the utility, transportation, and energy sectors worldwide.
