- 2026-Feb-17

Sensor fusion for ADAS / AD vehicles road safety

Written by Harsha Archak Mar 1, 2023 11:53:57 AM

Cameras, radars, LiDARs, and ultrasonic sensors are the main kinds of sensors used in vehicles equipped with Advanced Driver Assistance Systems (ADAS) and in Autonomous Vehicles (AVs).

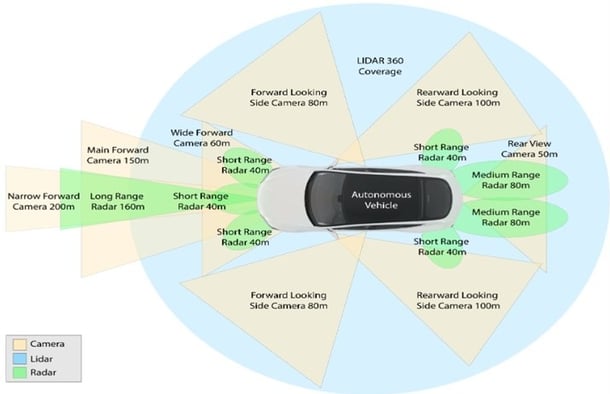

AVs can depend on more than 20 sensors mounted at various locations. Each sensor provides a different type of data: cameras provide images, radars and LiDARs provide point clouds, and so on. The Autonomous Driving (AD) software uses the data from each sensor individually and from combinations of sensors (e.g., radar + camera) to make precise driving decisions.

Sensors are the ‘eyes’ of an AV. They help the vehicle perceive its surroundings, i.e., people, objects, traffic, road trajectory, weather, lighting, etc.

This perception is critical, as it enables the AV to make the right decisions, i.e., stop, accelerate, turn, reverse, etc.

For AVs with higher levels of autonomy (L2+ and above), where autonomous features replace driver tasks, multiple sensors are required to understand the environment correctly.

But every sensor is different and has its own limitations. For example, a camera works very well for lane detection or object classification, whereas a radar provides good data for long-range detection or in varying light conditions.

Fig-1: Different sensors in an Autonomous Vehicle assist in making driving decisions

Sensor capabilities

| Capabilities | Camera | Radar | LiDAR |
| --- | --- | --- | --- |
| Long-range detection | Average | Good | Average |
| Differing lighting conditions | Average | Good | Good |
| Different weather conditions | Poor | Good | Poor |
| Object classification | Good | Poor | Good |
| Stationary object detection | Good | Poor | Good |

Sensor fusion improves the overall performance of an AV, and there are multiple fusion techniques to choose from, depending on the feature's Operational Design Domain (ODD).

Thus, the data collected from multiple sensors is fused using sensor fusion techniques to provide the best possible inputs for the AV to make appropriate decisions (brake, accelerate, turn, etc.).

Below are a few examples of fusion techniques. Depending on the level of autonomy of the AV, one or several of them will be used. For example, Level 2 feature development may need only object data fusion, lane fusion, and traffic sign fusion, as the feature requires, whereas Level 3 and above may require all of the fusion techniques.

Sensor fusion techniques

| Type of fusion | Sensors used |
| --- | --- |
| Object data fusion for improving information about stationary and moving objects surrounding the vehicle | Camera, Radar, LiDAR |
| Lane fusion for improving lane information | Camera, HD map |
| Traffic sign fusion for improving information about traffic signs and their direction | Camera, HD map, LiDAR |
| Free space fusion for improving information about drivable space | Camera, Radar, LiDAR |
| Localization for vehicle position estimation | HD map, vehicle sensors, Camera / LiDAR |

Sensor fusion complexity and challenges

The complexity of the environment and the feature specifications define the fusion strategy and the type of fusion required.

Executing sensor fusion is a complex activity, and one should account for numerous challenges, such as:

- Output accuracy varies between sensors and across regions of each sensor's field of view (FOV)

- Sensors produce false detections or miss detections (e.g., a camera may fail to detect an object or lane at night or at dusk)

- Objects might stay in a sensor's blind zone for some time, or might move dynamically into the blind zone

- There could be multiple detections of the same object by one sensor (e.g., a large truck might give multiple radar reflections)

- Detection confidence from a sensor could be low

- Different sensors output data at different sampling intervals (e.g., 40 to 70 milliseconds)

- Sensors are mounted at different positions on the vehicle

- Sensor performance varies with environmental conditions

These challenges may become more complex at higher levels of autonomy. Multiple sensors may be required to get close to 360-degree coverage and avoid blind spots.

The placement of sensors is also crucial to reduce blind zones and achieve the best performance without compromising safety. This also results in a tradeoff between cost and feature requirements.

Additionally, the level of accuracy of every sensor is different and managing the data accuracy of each sensor is critical for the final output. Thus, understanding the problem statement and the environment to account for these challenges is imperative.

High-level Sensor fusion KPI metrics

To ensure high levels of accuracy with Sensor Fusion, one must set the right KPIs to measure, along with a robust design.

Below are some of the identified KPIs to improve data accuracy:

a. Single track for each object

- No false or missed object detections

- Overall accuracy is improved

- Provide confidence level for each output

- Estimate output in sensor blind zone

b. Higher accuracy through design

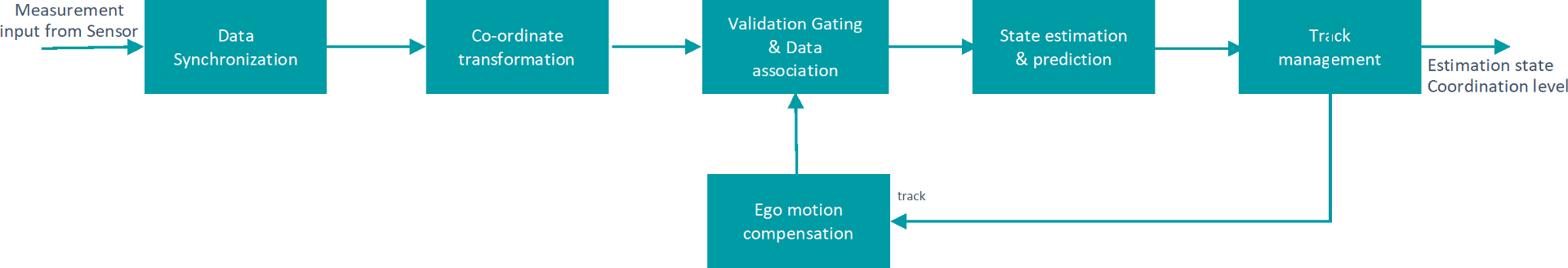

The following blocks illustrate a sensor fusion flow that can be used to achieve high accuracy in object detection. Architectures can differ depending on the type of fusion, e.g., high-level fusion, raw sensor fusion, etc.

Data Synchronization: Different sensors deliver outputs at different rates, e.g., a camera may give output every 50 milliseconds while a radar gives output every 60 milliseconds. Data synchronization aligns this data in time before sensor fusion is executed.
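One simple way to picture data synchronization is to resample one sensor's signal at the other sensor's timestamps. The sketch below assumes linear interpolation of a radar range signal onto camera frame times. The function name, the sample values, and the choice of interpolation are illustrative assumptions, not the method of any particular fusion stack.

```python
from bisect import bisect_left

def interpolate_to(timestamps_ms, values, query_ms):
    """Linearly interpolate a sampled signal at query_ms.

    Returns None when query_ms lies outside the sampled interval,
    because extrapolating sensor data is a separate design decision.
    """
    if not timestamps_ms or query_ms < timestamps_ms[0] or query_ms > timestamps_ms[-1]:
        return None
    i = bisect_left(timestamps_ms, query_ms)
    if timestamps_ms[i] == query_ms:
        return values[i]
    t0, t1 = timestamps_ms[i - 1], timestamps_ms[i]
    w = (query_ms - t0) / (t1 - t0)
    return values[i - 1] + w * (values[i] - values[i - 1])

# Hypothetical radar range to one object, sampled every 60 ms.
radar_t = [0, 60, 120, 180]
radar_range_m = [30.0, 29.4, 28.8, 28.2]
# Hypothetical camera frames arriving every 50 ms.
camera_t = [0, 50, 100, 150, 200]

# Radar range re-sampled at each camera timestamp (None where no radar data covers it).
aligned = [interpolate_to(radar_t, radar_range_m, t) for t in camera_t]
```

The last camera frame (200 ms) has no radar sample after it, so the sketch returns None rather than guessing. A production system would more likely predict the track forward to that time.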

Coordinate Transformation: This is a technique based on geometry and placement of sensors, to bring data from sensors mounted at different locations, into one common frame.

For example, whether the vehicle is a car that is 5.5 meters long and 2.5 meters wide or a truck that is 19 meters long and 5 meters wide, the data must be brought into one common frame, such as the rear-axle or front-axle frame.
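As a minimal sketch of the idea, a 2D rigid transform (rotation plus translation) moves a detection from a sensor's own frame into the vehicle frame. The frame convention (origin at the rear axle, x forward, y left) and the mounting values are assumptions chosen for illustration.

```python
import math

def sensor_to_vehicle(x_s, y_s, mount_x, mount_y, mount_yaw_rad):
    """Transform a 2D point from a sensor frame into the vehicle frame.

    mount_x, mount_y, mount_yaw_rad give the sensor pose expressed in the
    vehicle frame (assumed here: origin at rear axle, x forward, y left).
    """
    c, s = math.cos(mount_yaw_rad), math.sin(mount_yaw_rad)
    x_v = mount_x + c * x_s - s * y_s
    y_v = mount_y + s * x_s + c * y_s
    return x_v, y_v

# Hypothetical front radar: 3.8 m ahead of the rear axle, centered, facing forward.
front = sensor_to_vehicle(20.0, 1.0, 3.8, 0.0, 0.0)

# Hypothetical left corner radar: rotated 90 degrees to the left.
corner = sensor_to_vehicle(10.0, 0.0, 3.5, 0.9, math.radians(90.0))
```

A real system would use full 3D extrinsics (roll, pitch, yaw, and a 3D offset). The 2D case is shown only because it keeps the geometry easy to follow.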

Validation Gating and Data Association: This is a technique to ensure that the multiple measurements received from the sensors on the vehicle are matched to the same object, e.g., a truck in front.
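One common way to realize this step is to reject measurements that fall outside a statistical gate around each track and then pair the remaining candidates by distance. The sketch below assumes 2D positions, a diagonal covariance, and a greedy nearest-neighbor assignment. All names and numbers are hypothetical, and more capable schemes (for example joint probabilistic data association) are not shown.

```python
def mahalanobis_sq(track_xy, meas_xy, var_xy):
    """Squared Mahalanobis distance, assuming a diagonal covariance."""
    dx = meas_xy[0] - track_xy[0]
    dy = meas_xy[1] - track_xy[1]
    return dx * dx / var_xy[0] + dy * dy / var_xy[1]

def associate(tracks, measurements, var_xy, gate=9.21):
    """Greedy nearest-neighbor association inside a validation gate.

    gate=9.21 is the 99% chi-square threshold for 2 degrees of freedom.
    Returns {track_index: measurement_index}.
    """
    candidates = []
    for ti, t in enumerate(tracks):
        for mi, m in enumerate(measurements):
            d2 = mahalanobis_sq(t, m, var_xy)
            if d2 <= gate:  # validation gating
                candidates.append((d2, ti, mi))
    candidates.sort()  # closest pairs first
    pairs, used_measurements = {}, set()
    for _, ti, mi in candidates:
        if ti not in pairs and mi not in used_measurements:
            pairs[ti] = mi
            used_measurements.add(mi)
    return pairs

# Hypothetical predicted track positions and new measurements (vehicle frame, meters).
tracks = [(20.0, 0.0), (35.0, 3.5)]
measurements = [(35.4, 3.3), (20.3, -0.2), (60.0, -3.5)]
pairs = associate(tracks, measurements, var_xy=(0.5, 0.5))
```

Here the third measurement falls outside every gate and stays unassociated, which would typically make it a candidate for a new track.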

Track Management: Track management produces the final output of sensor fusion. It initializes, maintains, and deletes tracks based on track history, and also calculates track confidence.
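The track life cycle described above can be sketched with a simple "M hits in the last N cycles" confirmation rule and deletion after a run of consecutive misses. The thresholds, state names, and the hit-ratio confidence are illustrative assumptions. Real systems tune these per feature and operating environment.

```python
from collections import deque

class Track:
    """Hypothetical track life cycle: tentative -> confirmed -> deleted."""

    def __init__(self, confirm_hits=3, window=5, max_misses=3):
        # True means a detection was associated with the track in that cycle.
        self.history = deque(maxlen=window)
        self.confirm_hits = confirm_hits
        self.max_misses = max_misses
        self.misses_in_a_row = 0
        self.state = "tentative"

    def update(self, detected):
        """Record one fusion cycle and return the resulting track state."""
        self.history.append(detected)
        self.misses_in_a_row = 0 if detected else self.misses_in_a_row + 1
        if self.misses_in_a_row >= self.max_misses:
            self.state = "deleted"
        elif self.state == "tentative" and sum(self.history) >= self.confirm_hits:
            self.state = "confirmed"
        return self.state

    @property
    def confidence(self):
        """Fraction of recent cycles that had an associated detection."""
        return sum(self.history) / len(self.history) if self.history else 0.0

track = Track()
states = [track.update(d) for d in [True, False, True, True, False, False, False]]
```

In this run the track is confirmed on the fourth cycle (three hits within the window) and deleted after three consecutive misses.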

Ego Motion Estimation: This accounts for the movement of the vehicle itself. For example, if data is received at T+100 milliseconds and T+200 milliseconds, the vehicle has already moved in between, so the earlier data must be compensated for that motion before the two can be compared.
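A minimal sketch of ego-motion compensation, assuming the vehicle moves with constant speed and yaw rate between two measurement times and that the observed point is stationary in the world. The function name and the motion model are assumptions made for illustration.

```python
import math

def compensate_ego_motion(x, y, speed_mps, yaw_rate_rps, dt_s):
    """Re-express a point from the old vehicle frame in the current vehicle frame.

    Assumes constant speed and yaw rate over dt_s (x forward, y left)
    and a point that is stationary in the world.
    """
    dyaw = yaw_rate_rps * dt_s
    if abs(yaw_rate_rps) < 1e-6:
        # Straight-line motion.
        dx, dy = speed_mps * dt_s, 0.0
    else:
        # Motion along an arc of constant radius.
        radius = speed_mps / yaw_rate_rps
        dx = radius * math.sin(dyaw)
        dy = radius * (1.0 - math.cos(dyaw))
    # Shift to the new origin, then rotate by -dyaw into the new heading.
    tx, ty = x - dx, y - dy
    c, s = math.cos(dyaw), math.sin(dyaw)
    return c * tx + s * ty, -s * tx + c * ty

# Object seen 30 m ahead; the ego vehicle drives straight at 20 m/s for 100 ms.
x_new, y_new = compensate_ego_motion(30.0, 0.0, 20.0, 0.0, 0.1)
```

With these numbers the vehicle covers 2 m in 100 ms, so the same stationary object is now 28 m ahead.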

High-level Sensor fusion design consideration

- Selection of algorithms for data association and estimation technique:

Algorithms for data association: nearest neighbor, probabilistic, and joint probabilistic data association. Estimation techniques: linear Kalman filter, extended Kalman filter, particle filter.

- Fusion strategy:

The data association algorithm and estimation technique are chosen based on the types of sensors being fused, the state estimation requirement (dynamic or static object estimation), and the sensor outputs.

- Track management:

To reduce false outputs, fusion track management needs to build confidence before initializing a track. If initialization is delayed too long, however, the added latency delays the reaction of the AD system. The track initialization strategy, like the track deletion strategy, should therefore be decided considering the operating environment, feature ODD, etc.

- Filter tuning:

Tuning the filters with sensor characterization derived from real-world data improves results in practice.
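To make the estimation and tuning points concrete, here is a deliberately small sketch of a scalar linear Kalman filter that fuses camera and radar range measurements of one object. The random-walk motion model, the noise variances, and the measurement values are all assumptions. In practice the variances would come from sensor characterization on real-world data, and the state would usually include velocity.

```python
def kf_predict(x, p, q):
    """Random-walk prediction: keep the estimate, grow the variance by q."""
    return x, p + q

def kf_update(x, p, z, r):
    """Fuse measurement z (variance r) into estimate x (variance p)."""
    k = p / (p + r)  # Kalman gain: how much to trust the measurement
    return x + k * (z - x), (1.0 - k) * p

# Initial range estimate in meters with a large initial uncertainty.
x, p = 30.0, 100.0

# Hypothetical (camera, radar) range measurements for two fusion cycles.
for z_cam, z_radar in [(31.0, 29.8), (30.5, 29.9)]:
    x, p = kf_predict(x, p, q=0.1)
    x, p = kf_update(x, p, z_cam, r=4.0)     # assumed camera range variance
    x, p = kf_update(x, p, z_radar, r=0.25)  # assumed radar range variance
```

Because the radar variance is set much lower than the camera variance, the fused estimate ends up close to the radar readings, and the fused variance drops below that of either sensor alone. Changing `r` for either sensor is exactly the kind of tuning that sensor characterization informs.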

Fusion validation

It is important to use the right validation strategy to test fusion with simulated data, real-world data, or in-vehicle testing, thereby ensuring software quality and scenario coverage.

Following are the key aspects for fusion validation:

- Edge case Scenario Selection: Since perception improves performance by fusing various sensor data, scenarios shall be carefully selected, considering all possible edge cases. The scenario shall also mention which level of validation is required - simulation, real world or in vehicle. The objective shall be maximum coverage at simulation level to reduce cost and time, without compromising on quality analysis.

- Sensor modeling: To validate fusion in simulation with environment noise effect, high fidelity sensor model is required to mimic the environmental impact on the sensor performance. Since there is ongoing research on high-fidelity modelling, numerous techniques such as data-driven model, physics-based modelling, etc., are being proposed for implementation.

- Validation with real world data: A high-fidelity sensor model can reproduce environmental conditions with about 75 to 85% accuracy. Since full data precision is not achievable in simulation, validating fusion with real-world data becomes imperative for accurate decisions.

- Vehicle testing: The final validation of fusion shall be on vehicle to ensure end-to-end testing at feature level considering actual conditions, sensor latency and actuation delay.

So, fusion is an extremely critical perception component for AD performance, and one must consider key practical aspects and define the right strategy for design and validation to achieve the highest level of maturity of AD software.

Sensor calibration

Since the AV depends on such a wide range of sensors, calibration of these sensors becomes very important. Mis-calibrated sensors can have catastrophic impacts on the safety and functionality of the AV. Calibration of individual sensors is a long-drawn-out process, and different sensors, e.g., LiDAR, radar, or cameras, require different calibration methodologies. This calibration is typically carried out at the end of the production line.

The general understanding in the industry is that intrinsic calibration parameters, i.e., geometric distortion, focal length, etc., do not change over time and are typically calibrated only once. Extrinsic calibration, i.e., position and orientation, is more of a challenge, because it can change for various reasons, from poor road conditions to sensors being mounted on movable car parts such as the side-view mirror. For example, when a rear-facing camera mounted on the auto-folding side-view mirror is used for lane change functionality or to detect the presence of a vehicle in the adjacent lane, a yaw angle miscalibration of 0.5 degrees can cause a lateral error of almost one meter, depending on the distance to the vehicle. A lateral error of one meter could result in a vehicle in the adjacent lane going undetected.
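The relationship between yaw error and lateral error follows from simple geometry: the lateral offset is roughly the distance multiplied by the tangent of the angular error. The short sketch below evaluates this for a 0.5 degree error. The function name and the chosen distances are illustrative.

```python
import math

def lateral_error_m(distance_m, yaw_error_deg):
    """Lateral offset caused by a yaw error at a given distance."""
    return distance_m * math.tan(math.radians(yaw_error_deg))

# Lateral error for a 0.5 degree yaw miscalibration at several distances (meters).
errors = {d: lateral_error_m(d, 0.5) for d in (25, 50, 100, 115)}
```

At 100 m the error is about 0.87 m, and it reaches one meter at roughly 115 m, which is consistent with the "almost one meter, depending on the distance" figure above.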

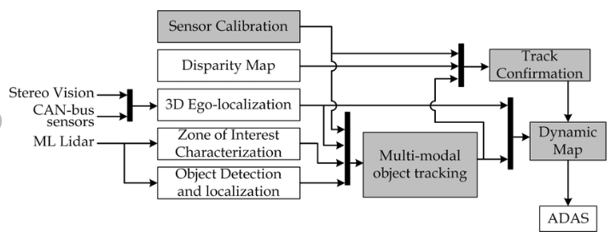

Multi-modal perception block diagram

A multi-modality sensor calibration system requires the following features:

- Self-diagnosis and determination of error required for correction.

- Duration to calibrate must not exceed a few seconds for each sensor.

- Auto-correction of safety-related errors to meet functional safety requirements.

- Determination of an ideal scenario to auto-calibrate.

- Ability to function in both highways and urban scenarios.

- Absence of external human involvement.

Additionally, it is important to calibrate both individual sensors and multiple sensors with respect to each other. Multi-modality sensor calibration refers to the calibration of different types of sensors relative to one another, such as a camera with respect to a LiDAR, or a radar with respect to a LiDAR.

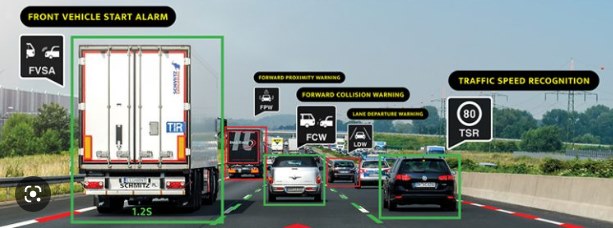

In an AV, features such as Lane Departure Warning (LDW), Forward Collision Warning (FCW), Traffic Sign Recognition (TSR) rely on the front-facing camera. A well-calibrated camera can position the vehicle in the center of the lane and maintain an appropriate distance from the vehicle in the front.

But a minor error in the pitch angle of the camera mounting can result in the vehicle in front appearing further away than it is. It could result in delayed system response to avoid a collision with a vehicle in the front.
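The effect of a pitch error on estimated distance can be illustrated with a flat-road model, in which the range to a point on the ground is the camera height divided by the tangent of the angle below the horizon at which the point is seen. This model, the 1.3 m camera height, and the sign convention of the error are assumptions for illustration. Production systems use more elaborate range estimation.

```python
import math

def ground_range_m(camera_height_m, angle_below_horizon_rad):
    """Flat-road range to a ground point seen at the given depression angle."""
    return camera_height_m / math.tan(angle_below_horizon_rad)

def range_with_pitch_error(true_range_m, camera_height_m, pitch_error_deg):
    """Range estimated when the assumed camera pitch is off by pitch_error_deg.

    A positive error here makes the ray look closer to the horizon.
    Only valid while the assumed angle stays below the horizon (positive).
    """
    true_angle = math.atan2(camera_height_m, true_range_m)
    assumed_angle = true_angle - math.radians(pitch_error_deg)
    return ground_range_m(camera_height_m, assumed_angle)

# Vehicle actually 50 m ahead, camera assumed 1.3 m above the road, 0.5 degree pitch error.
estimated = range_with_pitch_error(50.0, 1.3, 0.5)
```

Under these assumptions a vehicle that is 50 m away would be estimated at about 75 m, because at long range the viewing ray is nearly parallel to the road and small angular errors translate into large range errors.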

As a Technology Solutions Provider, Cyient works closely with industry experts, equipment manufacturers, and aftermarket customers to align with automotive industry trends through our focus areas of megatrends "Intelligent Transport and Connected Products," "Augmentation and Human Well-Being," and "Hyper-Automation and Smart Operations."

Solution Accelerator for choosing Cyient as value-add partner

- Toolchain integration, development excellence (e.g., defect resolution time) and ready-to-use Sensor fusion SW stack will become key differentiators, reducing customer development efforts and accelerating time-to-market

- Value-creation for reusable libraries

- End-to-end product engineering (off-the-shelf)

- Scope for single-sourcing solutions and strategic partnerships

- Sustainable solution model as one-time royalty license and / or non-recurring engineering (NRE) efforts

All this ensures that the software life cycle will extend beyond five to seven years after SoP (start-of-production)

About the author

Harsha has over two decades of experience in Automotive & Hi-Tech Industry with expertise in New Product Engineering & Integration Strategy for ADAS & Self-driving applications, vehicle HMI infotainment connectivity solutions and Device Protocol development. Currently, he serves as ADAS / AD Domain SME under Technology Group.
