- 2026-Feb-17

Object Detection and Annotation Tools

Written by Lenka Venkatagiri Babu 13 Feb, 2024

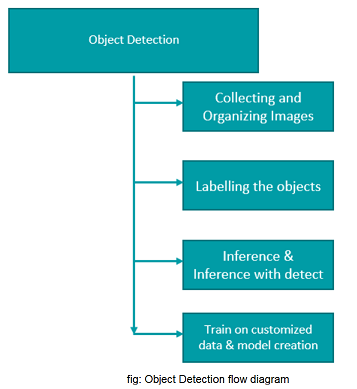

In Advanced Driving Assistance (ADAS) or autonomous driving systems, object detection is a computer vision task that involves identifying and locating objects/obstacles in an image or video. The goal is to recognize what objects are present in the scene and determine their precise locations by drawing bounding boxes around them. Object detection is a crucial component in many applications, including autonomous vehicles, surveillance, alerts, assisting drivers to avoid collisions, image understanding, and augmented reality.

Here are some key concepts and approaches in object detection:

- Image/video capture

- Use cameras to capture images or stream video. These can be traditional RGB cameras, depth cameras, or a combination of both.

- Pre-processing

- Enhance or pre-process the captured images to improve the quality of the data. This might involve resizing, normalizing, or filtering the images.

- Bounding box

- Bounding boxes are rectangular frames that enclose objects in an image. They are defined by the coordinates (x, y) for the top left corner and (width, height) for the dimensions of the box.

- Object detection approaches

- Sliding window: This is a traditional approach where a window of fixed size slides over the entire image, and a classifier is applied to each window to determine whether an object is present.

- Region-based CNNs (R-CNN, Fast R-CNN, Faster R-CNN): These methods propose regions of interest (RoIs) in the image and use convolutional neural networks (CNNs) to classify and refine these regions.

- You Only Look Once (YOLO): YOLO divides the image into a grid and predicts bounding boxes and class probabilities for each grid cell in a single pass. It's known for its real-time processing speed.

- SSingle Shot Multibox Detector (SSD): Similar to YOLO, SSD also predicts multiple bounding boxes for each grid cell at different scales, providing a good balance between speed and accuracy.

- RetinaNet: Introduced the focal loss to address the class imbalance in object detection. It combines a feature pyramid network with a prediction network to handle objects at different scales.

- YOLOv5, YOLOv6, YOLOv8, and beyond: These are further improvements and adaptations of the YOLO architecture, with each version aiming to enhance accuracy, speed, or both.

- Datasets

- Datasets play a crucial role in training and evaluating object detection models. Popular datasets include COCO (common objects in context), Pascal VOC (visual object classes), and Open Images.

- Training the model

- For deep learning approaches, the model needs to be trained on a dataset labeled with the objects of interest. This involves presenting the model with annotated images and adjusting its parameters to improve accuracy.

- Inference

- Apply the trained model to the pre-processed images to identify objects. This involves running the image through the neural network and extracting information about the objects present.

- Evaluation metrics

- Common metrics for evaluating object detection models include precision, recall, average precision (AP), and mean average precision (mAP).

- Transfer learning

- Many object detection models leverage transfer learning by pre-training on large datasets (e.g., ImageNet) and fine-tuning on task-specific datasets. This helps the model generalize well to new object detection tasks with limited labeled data.

- Post-processing

- Refine the output by filtering or post-processing techniques. This may involve removing duplicate detections, applying confidence thresholds, or using non-maximum suppression.

- Visualization

- Display the results or take appropriate actions based on the identified objects. This could include drawing bounding boxes around detected objects, triggering alerts, or controlling devices.

Considerations for Deploying a Detection Model for The ECU Platform

- Real-time processing: Many applications require real-time processing, especially in fields like autonomous vehicles and robotics.

- Hardware acceleration: Some deep learning models may require specialized hardware, such as GPUs or TPUs, for efficient real-time processing.

- Data privacy and security: In surveillance applications, it's important to consider privacy concerns and implement measures to protect sensitive information.

- Robustness: The system should be able to handle various environmental conditions, such as changes in lighting, weather, and occlusions.

- Accuracy vs. speed trade-off: Depending on the application, there might be a need to balance accuracy and speed.

Object detection is a rapidly evolving field, and ongoing research is focused on improving the efficiency, accuracy, and applicability of these systems across diverse domains.

Annotation and Tools

Annotation is a crucial step in the development of machine learning models, especially in the field of computer vision. It involves labeling or marking data to provide supervised learning algorithms with the ground truth, enabling the model to learn and make accurate predictions. The significance of annotation lies in its ability to enhance the quality and reliability of training datasets, leading to more robust and accurate machine learning models. Here are some key aspects of the significance of annotation and the tools used for annotation:

- Supervised learning

- Annotation is essential for supervised learning, where the model learns from labeled examples. It provides the necessary input-output pairs that help the algorithm generalize patterns and make predictions on unseen data.

- Model accuracy

- High-quality annotations contribute to the accuracy of the model. Precise and consistent annotations ensure that the model can correctly identify and classify objects, leading to better performance.

- Diversity of data

- Annotation allows for the creation of diverse datasets that cover a wide range of scenarios and variations. This helps the model generalize well across different conditions, improving its robustness.

- Object localization

- Annotation is crucial for tasks like object detection, where the model needs to classify objects and locate them within an image. Bounding box annotations provide information about the object's position.

- Semantic segmentation

- In tasks like semantic segmentation, where the goal is to segment objects at the pixel level, annotation involves labeling each pixel with the corresponding object class. This is valuable for applications like image understanding and autonomous vehicles.

- Training set quality

- The quality of the training dataset significantly influences the performance of the model. Annotation ensures that the dataset is representative and balanced and covers the full range of variations the model may encounter in real-world scenarios.

Effective annotation is a collaborative process involving domain experts and annotators to ensure that the labeled data accurately represents the real-world scenarios the model will encounter. It plays a foundational role in the success of machine learning applications, particularly in computer vision.

Annotation Tools

- XAnyLabeling

- X-AnyLabeling is a robust annotation tool seamlessly incorporating an AI inference engine alongside an array of sophisticated features.

- Supports inference acceleration using GPU.

- Handles both image and video processing.

- Allows single-frame and batch predictions for all tasks.

- Enables one-click import and export of mainstream label formats such as COCO, VOC, YOLO, DOTA, MOT, and MASK.

- Supports various image annotation styles, including polygons, rectangles, rotated boxes, circles, lines, and points.

- CVAT

- CVAT (computer vision annotation tool) is an open-source annotation tool designed for computer vision tasks, including object detection, image segmentation, and keypoint annotation.

- It enables users to annotate images and videos with bounding boxes, polygons, polylines, points, and more.

- CVAT supports various annotation formats, such as Pascal VOC, COCO, and YOLO, making it compatible with popular object detection frameworks.

- Users can annotate individual images as well as entire video sequences, with support for tracking objects across frames.

- CVAT provides some level of automation for object tracking and annotation, helping to speed up the annotation process.

- Labellmg

- Labellmg is an open-source graphical image annotation tool that is written in Python and uses Qt for its graphical interface.

- It supports various annotation formats, including Pascal VOC and YOLO.

- RectLabel

- RectLabel is a commercial annotation tool for macOS that allows users to label images for object detection and image segmentation tasks.

- It supports various annotation types, including bounding boxes, points, and polygons.

- VGG Image Annotator (VIA)

- VIA is an open-source image annotation tool developed by the Visual Geometry Group (VGG) at the University of Oxford.

- It supports a wide range of annotation types, including bounding boxes, polygons, and points.

- It saves the annotations in a format supported by your object detection model.

- Labelbox

- Labelbox is a cloud-based platform for data labeling, including object detection tasks. It supports collaborative annotation and provides an API for integration into machine learning workflows.

- It also exports the annotations in the desired formats.

- COCO Annotator

- COCO Annotator is an open-source Web-based annotation tool designed specifically for the COCO dataset format.

- It provides an interface for annotating images with bounding boxes and segmentation masks.

When choosing an annotation tool, consider factors such as the annotation formats it supports, ease of use, collaboration features, and whether it aligns with the specific requirements of your project. Additionally, some tools are better suited for individual use, while others are designed for collaborative workflows in larger teams.

About the Author

He has 15 years of experience in evaluating cutting-edge PoC solutions in automotive domain and DSP, embedded multimedia & ADAS domains with expertise in camera-based vision algorithm developments.

.png?width=774&height=812&name=Master%20final%201%20(1).png)