Building an Efficient Face Recognition System

Written by Purushothama Reddy Bandla 29 May, 2024

In today's era of advancing technology, face recognition systems have become integral to various applications ranging from security systems to social media platforms. The ability to accurately identify and authenticate individuals based on facial features has revolutionized numerous industries. Here, we delve into the intricacies of building an efficient face recognition system, exploring the underlying algorithms, techniques, and best practices.

Why Face Recognition is in Demand

Face recognition lets us identify people by their faces, alongside other biometric parameters such as iris and fingerprints. The advantages of automatically identifying people by their faces are apparent. In addition, a face recognition system is contactless, easy to use, and fast. That is why efforts to build face recognition systems with an acceptable level of accuracy are gathering momentum.

Applications of Face Recognition Systems

- Security companies use facial recognition to secure their premises. The most important capability here is detecting unauthorized access to restricted areas.

- Face recognition can be used by fleet management organizations to safeguard their vehicles.

- Ride-sharing companies can benefit from face recognition software to ensure the correct driver picks up the right passenger.

- Face recognition enhances home IoT devices by providing improved security and automatic access management.

- Retailers may use face recognition to personalize in-store offerings and, in theory, to link online shopping behavior to offline behavior.

- Face recognition is used at immigration checkpoints to strengthen border control.

- Security authorities in the US deploy this technology at airports to identify people who have overstayed their visas.

- Stores and shops are installing facial surveillance systems to recognize potential shoplifters’ faces.

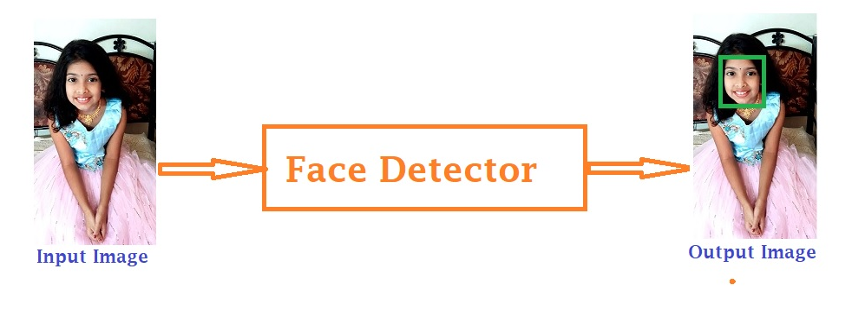

Face Recognition Software Workflow

Face recognition systems utilize computer vision algorithms to identify and authenticate individuals based on facial features extracted from images or video frames.

The process involves the following 12 steps:

- Problem definition and requirements gathering:

Defining the objectives and requirements of the face recognition system. Determining the specific use cases, target audience, and performance metrics.
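The performance metrics chosen at this stage can be written down as a small config and checked against measured results later. Below is a hypothetical Python sketch. The field names, the use of false accept rate (FAR) and false reject rate (FRR), and the target values are all assumptions for illustration. None of them come from a real project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    """Hypothetical requirement targets for one face recognition use case."""
    use_case: str
    max_false_accept_rate: float  # share of impostor attempts wrongly accepted
    max_false_reject_rate: float  # share of genuine attempts wrongly rejected
    max_latency_ms: float         # per-frame processing budget


def meets_requirements(req, far, frr, latency_ms):
    """Return a pass/fail flag for each measured metric."""
    return {
        "far": far <= req.max_false_accept_rate,
        "frr": frr <= req.max_false_reject_rate,
        "latency": latency_ms <= req.max_latency_ms,
    }


door_access = Requirements("office door access", 0.001, 0.05, 200.0)
print(meets_requirements(door_access, far=0.0005, frr=0.08, latency_ms=120.0))
# {'far': True, 'frr': False, 'latency': True}
```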

- Data collection:

Gathering a diverse dataset of face images that represent the variability in the target population. Collecting face images that encompass variations in pose, lighting conditions, facial expressions, and demographics.
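One simple way to check that a collected dataset covers these variations is to count the labels for each attribute and flag rare values. This sketch assumes each image carries hypothetical metadata fields (`pose`, `lighting`, `expression`). The records and the 30% share cut-off are made up for illustration.

```python
from collections import Counter

# Hypothetical per-image metadata; real labels would come from annotation.
records = [
    {"person": "p1", "pose": "frontal", "lighting": "indoor", "expression": "neutral"},
    {"person": "p1", "pose": "profile", "lighting": "outdoor", "expression": "smile"},
    {"person": "p2", "pose": "frontal", "lighting": "indoor", "expression": "neutral"},
    {"person": "p2", "pose": "frontal", "lighting": "low", "expression": "neutral"},
]


def coverage_report(records, attributes, min_share=0.3):
    """Count each attribute's values and flag those below min_share of all images."""
    total = len(records)
    report = {}
    for attr in attributes:
        counts = Counter(r[attr] for r in records)
        rare = sorted(v for v, c in counts.items() if c / total < min_share)
        report[attr] = {"counts": dict(counts), "underrepresented": rare}
    return report


for attr, info in coverage_report(records, ["pose", "lighting", "expression"]).items():
    print(attr, info)
```

This check can only flag values that appear at least once. A condition that is missing entirely, such as no low-light images at all, has to be caught by comparing against an explicit list of expected values.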

- Face detection:

Detecting any faces that appear in the frame. Classic methods such as Haar Cascades and DLib-HOG can be used for face detection. However, deep learning-based approaches generally give better performance and accuracy. Deep learning-based face detection models include:

- Single Shot MultiBox Detector (SSD)
- Multi-Task Cascaded Convolutional Neural Network (MTCNN)
- Dual Shot Face Detector (DSFD)
- RetinaFace-ResNet
- RetinaFace-MobileNetV1
- MediaPipe
- YuNet

Using pre-trained models is recommended to save project development time.
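Deep detectors such as SSD, MTCNN and RetinaFace usually emit several overlapping candidate boxes per face. They filter these boxes with non-maximum suppression (NMS). Below is a minimal NumPy sketch of greedy NMS. The box format `(x1, y1, x2, y2)` and both threshold defaults are assumptions, not values taken from any particular detector.

```python
import numpy as np


def iou(box, boxes):
    """Intersection-over-union between one box and an array of boxes (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def nms(boxes, scores, iou_threshold=0.4, score_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it, repeat."""
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(scores)[::-1]                  # highest score first
    order = order[scores[order] >= score_threshold]   # discard weak candidates
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        order = rest[iou(boxes[best], boxes[rest]) < iou_threshold]
    return keep


boxes = [[10, 10, 60, 60], [12, 12, 62, 62], [100, 100, 150, 150]]
scores = [0.9, 0.8, 0.95]
print(nms(boxes, scores))  # [2, 0]
```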

- Data preprocessing:

Preprocessing face images to enhance quality and consistency. Common preprocessing steps include alignment and normalization.

Alignment: entails aligning face images to a canonical pose to reduce variations caused by head orientation.

Normalization: entails normalizing images for factors like illumination, contrast, and scale to improve robustness.
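A common way to implement alignment is in two steps. First, estimate a similarity transform (rotation, uniform scale and translation) that maps the 5 detected landmarks onto a fixed template. Then warp the image with that transform. The sketch below does the estimation with the Umeyama least-squares method in NumPy. It also adds a simple zero-mean, unit-variance intensity normalization. The template is the 112x112 five-point layout commonly used with ArcFace-style recognizers. Treat its exact coordinates, and the function names, as assumptions.

```python
import numpy as np

# Assumed 5-point template (eyes, nose tip, mouth corners) for a 112x112 crop.
TEMPLATE_112 = np.array([
    [38.2946, 51.6963],  # left eye
    [73.5318, 51.5014],  # right eye
    [56.0252, 71.7366],  # nose tip
    [41.5493, 92.3655],  # left mouth corner
    [70.7299, 92.2041],  # right mouth corner
])


def similarity_transform(src, dst):
    """Least-squares similarity transform (Umeyama) mapping src points onto dst.
    Returns a 2x3 matrix [s*R | t]."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    s_c, d_c = src - mu_s, dst - mu_d
    cov = d_c.T @ s_c / len(src)           # cross-covariance of the two point sets
    U, S, Vt = np.linalg.svd(cov)
    d = np.ones(2)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        d[-1] = -1.0                       # prevent a reflection
    R = U @ np.diag(d) @ Vt
    scale = (S * d).sum() / (s_c ** 2).sum(axis=1).mean()
    t = mu_d - scale * R @ mu_s
    return np.hstack([scale * R, t[:, None]])


def normalize_intensity(face):
    """Zero-mean, unit-variance scaling to reduce illumination and contrast differences."""
    face = np.asarray(face, dtype=float)
    return (face - face.mean()) / (face.std() + 1e-8)
```

The returned 2x3 matrix has the layout that OpenCV's `cv2.warpAffine(image, M, (112, 112))` expects, so passing it there would produce the aligned crop.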

- Feature extraction:

Extracting discriminative features from the preprocessed face images. This stage is about identifying the key parts of a face and turning them into numbers. A widely used approach represents each face as a set of 128 measurements, known as an embedding. The network that produces these measurements was trained by comparing thousands of labeled face images. We can use such a pre-trained network to compute the measurements for new faces. The resulting embeddings are then used to compare two images.

The traditional DLib-HOG method uses 28, 68, or 128 landmarks from the face.

The CNN model SFace uses only 5 landmarks from the face, as shown above.

Feature extraction typically uses either traditional methods or deep learning approaches. Traditional methods include Principal Component Analysis (PCA), Linear Discriminant Analysis (LDA), Local Binary Patterns (LBP), and Histogram of Oriented Gradients (HOG). Deep learning-based approaches, such as the SFace model, use convolutional neural networks (CNNs) trained on large-scale face datasets.

It was observed that the 5-landmark SFace model performs better than the 68-landmark HOG approach when comparing two images. At the same time, more landmark points are required to recognize faces when the mouth is covered by a mask or the eyes by spectacles. More landmarks may also be required for applications such as drowsiness detection or facial emotion recognition. A trained CNN model with more landmark points should therefore be preferred over traditional methods for better performance and accuracy. Increasing the number of landmark points improves accuracy and performance, but it also increases the complexity and size of the model.
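Here is a minimal NumPy sketch of one traditional method, Local Binary Patterns (LBP). Each pixel is encoded by comparing it with its 8 neighbours. Histograms of these codes over a grid of cells are then concatenated into a single feature vector. This is the basic radius-1 variant without the "uniform pattern" refinement. The function names and the 4x4 grid are assumptions.

```python
import numpy as np


def lbp_codes(gray):
    """Basic 8-neighbour LBP codes (radius 1) for all interior pixels."""
    g = np.asarray(gray, dtype=float)
    h, w = g.shape
    center = g[1:h - 1, 1:w - 1]
    # Neighbour offsets, clockwise from top-left; each one sets one bit of the code.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes += (neighbour >= center).astype(np.int64) << bit
    return codes


def lbp_histogram(gray, grid=(4, 4)):
    """Concatenate normalized 256-bin LBP histograms from a grid of cells."""
    codes = lbp_codes(gray)
    feats = []
    for rows in np.array_split(codes, grid[0], axis=0):
        for cell in np.array_split(rows, grid[1], axis=1):
            hist = np.bincount(cell.ravel(), minlength=256).astype(float)
            feats.append(hist / max(hist.sum(), 1.0))
    return np.concatenate(feats)
```

The output is a set of histograms. That makes it a natural fit for the L1 distance the face recognition step suggests for traditional features.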

- Face recognition:

Once features are extracted for both the database images and the face being identified, they are compared to determine how similar they are. Standard methods for comparing face embeddings include cosine similarity, Euclidean distance, and L1 distance (Manhattan distance). Cosine similarity is often preferred for face recognition systems based on deep learning embeddings. L1 distance may be more suitable for traditional feature-based approaches.
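Each of the three comparison methods takes only a few lines. The sketch below also adds a simple identification step. It compares the probe embedding against every enrolled embedding and accepts the best match only if its cosine similarity reaches a threshold. The gallery dictionary and the 0.5 threshold are hypothetical. In practice the threshold depends on the model and is tuned on validation data.

```python
import numpy as np


def cosine_similarity(a, b):
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def euclidean_distance(a, b):
    return float(np.linalg.norm(np.asarray(a, dtype=float) - np.asarray(b, dtype=float)))


def l1_distance(a, b):
    return float(np.abs(np.asarray(a, dtype=float) - np.asarray(b, dtype=float)).sum())


def identify(probe, gallery, threshold=0.5):
    """Return (name, score) of the most similar enrolled embedding,
    or (None, score) if the best cosine similarity is below the threshold."""
    best_name, best_score = None, -1.0
    for name, emb in gallery.items():
        score = cosine_similarity(probe, emb)
        if score > best_score:
            best_name, best_score = name, score
    return (best_name if best_score >= threshold else None), best_score


gallery = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}  # toy embeddings
print(identify([0.9, 0.1, 0.0], gallery)[0])  # alice
```

For L2-normalized embeddings, cosine similarity and Euclidean distance rank matches identically, because the squared Euclidean distance equals 2 - 2 * cosine similarity.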

- Model training:

Training a face recognition model using the extracted features and annotated training data. Common algorithms include Support Vector Machines (SVMs), k-Nearest Neighbors (kNN), and Random Forests. Deep learning architectures include Siamese networks, Triplet networks, and variations of CNNs.
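Here is an illustration of two of the techniques named above: a k-nearest-neighbours classifier over face embeddings, and the triplet loss used to train triplet networks. The sketch uses NumPy only. The function names, the margin value and the toy data are assumptions. SVMs or random forests would normally come from a library such as scikit-learn rather than being written by hand.

```python
from collections import Counter

import numpy as np


def knn_predict(train_X, train_y, x, k=3):
    """Label one embedding by majority vote among its k nearest (Euclidean) neighbours."""
    dists = np.linalg.norm(np.asarray(train_X, dtype=float) - np.asarray(x, dtype=float), axis=1)
    nearest = np.argsort(dists)[:k]
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Mean triplet loss: the anchor should be closer (in squared distance) to a
    same-identity positive than to a different-identity negative by at least margin."""
    anchor, positive, negative = (np.asarray(v, dtype=float) for v in (anchor, positive, negative))
    d_pos = np.sum((anchor - positive) ** 2, axis=-1)
    d_neg = np.sum((anchor - negative) ** 2, axis=-1)
    return float(np.mean(np.maximum(d_pos - d_neg + margin, 0.0)))


train_X = [[0, 0], [0, 1], [5, 5], [5, 6]]
train_y = ["a", "a", "b", "b"]
print(knn_predict(train_X, train_y, [0.2, 0.3]))  # a
print(triplet_loss([0, 0], [0, 1], [1, 0]))       # 0.2
```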

- Model evaluation and validation:

Evaluating the performance of the trained model using validation datasets and appropriate evaluation metrics (e.g., accuracy, precision, recall, F1-score). Cross-validation is also used to assess how well the model generalizes to unseen data and how robust it is.
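These metrics can be computed directly from counts of true and false positives and negatives. The sketch below treats verification as a binary task (1 = same person, 0 = different people). It also adds a simple k-fold splitter. Both are illustrative helpers, not the API of a specific library.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = match, 0 = non-match)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


def k_fold_indices(n, k=5):
    """Yield (train_idx, val_idx) pairs for k contiguous folds over n samples."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size


print(binary_metrics([1, 1, 1, 0, 0, 0], [1, 1, 0, 0, 0, 1]))
```

In practice the samples would usually be shuffled before splitting. For face data it is generally advisable to split by identity, so that the same person never appears in both training and validation folds. scikit-learn offers tested versions of these pieces, such as `precision_recall_fscore_support`, `KFold` and `GroupKFold`.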

- Model optimization and fine-tuning:

Optimizing the model's hyperparameters and architecture to improve performance and efficiency. The model is fine-tuned on specific datasets or tasks to adapt it to the target domain and mitigate overfitting.
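Every verification system has at least one hyperparameter that needs tuning: the decision threshold on the similarity score. A common approach is to choose it on a held-out validation set. The sketch below runs a simple grid search that maximizes accuracy over labeled pairs. The function name and the choice of accuracy as the objective are assumptions. A false-accept-rate target or F1 could be optimized the same way.

```python
import numpy as np


def tune_threshold(scores, labels, candidates=None):
    """Grid-search the similarity threshold that maximizes accuracy on validation pairs.
    scores: similarity per pair; labels: 1 = same person, 0 = different people."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    if candidates is None:
        candidates = np.unique(scores)  # every observed score is a possible cut-off
    best_t, best_acc = None, -1.0
    for t in candidates:
        acc = float(np.mean((scores >= t).astype(int) == labels))
        if acc > best_acc:
            best_t, best_acc = float(t), acc
    return best_t, best_acc


print(tune_threshold([0.9, 0.8, 0.7, 0.4, 0.3, 0.1], [1, 1, 1, 0, 0, 0]))  # (0.7, 1.0)
```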

- Deployment and integration:

Integrating the trained model into the target application or system. This may involve developing APIs or SDKs for seamless integration with other software components, and ensuring compatibility with different platforms (e.g., desktop, mobile, and edge devices). It may also involve implementing real-time processing capabilities for latency-sensitive applications.
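Here is a hypothetical, framework-agnostic sketch of the API side: a service object that accepts JSON requests to enroll an embedding or identify a new one. The class name, the request schema (`action`, `name`, `embedding`) and the threshold are all assumptions. A real deployment would wrap `handle()` in an HTTP framework or SDK and would receive embeddings from the feature extractor.

```python
import json
import math


class FaceRecognitionService:
    """Hypothetical in-process service for enrolling and identifying face embeddings."""

    def __init__(self, threshold=0.5):
        self.threshold = threshold  # hypothetical cosine-similarity cut-off
        self.gallery = {}

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        denom = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / denom if denom else 0.0

    def handle(self, request_json):
        """Dispatch a JSON request such as {"action": "identify", "embedding": [...]}."""
        req = json.loads(request_json)
        if req.get("action") == "enroll":
            self.gallery[req["name"]] = req["embedding"]
            return json.dumps({"status": "ok"})
        if req.get("action") == "identify":
            scores = {n: self._cosine(req["embedding"], e) for n, e in self.gallery.items()}
            best = max(scores, key=scores.get, default=None)
            if best is None or scores[best] < self.threshold:
                return json.dumps({"status": "ok", "match": None})
            return json.dumps({"status": "ok", "match": best, "score": round(scores[best], 4)})
        return json.dumps({"status": "error", "message": "unknown action"})


svc = FaceRecognitionService()
svc.handle(json.dumps({"action": "enroll", "name": "alice", "embedding": [1.0, 0.0]}))
print(svc.handle(json.dumps({"action": "identify", "embedding": [0.9, 0.1]})))
```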

- Testing and quality assurance:

Conducting thorough testing to validate the functionality, performance, and reliability of the deployed system. Unit testing, integration testing, and end-to-end testing are undertaken to identify and address potential issues and bugs.
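As an example of unit testing, the sketch below uses Python's built-in `unittest` module to check basic properties of a cosine-similarity function. The function and test names are illustrative. The same pattern extends to detection, alignment and end-to-end tests that use fixed reference images.

```python
import math
import unittest


def cosine_similarity(a, b):
    """Function under test (illustrative)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("zero-length embedding")
    return dot / norm


class CosineSimilarityTests(unittest.TestCase):
    def test_identical_vectors_score_one(self):
        self.assertAlmostEqual(cosine_similarity([0.3, 0.4], [0.3, 0.4]), 1.0)

    def test_orthogonal_vectors_score_zero(self):
        self.assertAlmostEqual(cosine_similarity([1, 0], [0, 1]), 0.0)

    def test_scale_invariance(self):
        self.assertAlmostEqual(cosine_similarity([1, 2], [2, 4]), 1.0)

    def test_zero_vector_rejected(self):
        with self.assertRaises(ValueError):
            cosine_similarity([0, 0], [1, 1])
```

The tests can be run with a test runner such as `python -m unittest`.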

- Deployment and maintenance:

Deploying the face recognition system in production environments and monitoring its performance and usage. Mechanisms for logging, monitoring, and error handling are implemented to facilitate troubleshooting and maintenance. Based on user feedback, the system is regularly updated and improved to keep pace with technological advancements and changing regulatory requirements.
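For monitoring, one useful signal is how often recognitions come back with low confidence. A sudden rise can point to a camera, lighting or data-drift problem. The sketch below tracks that ratio over a sliding window using Python's `logging` module. The class name, window size, score cut-off and alert ratio are hypothetical values.

```python
import logging
from collections import deque

logger = logging.getLogger("face_recognition.monitor")


class MatchMonitor:
    """Track the share of low-confidence recognitions over a sliding window and
    log a warning when it exceeds a (hypothetical) alert ratio."""

    def __init__(self, window=100, low_score=0.5, alert_ratio=0.3):
        self.scores = deque(maxlen=window)
        self.low_score = low_score
        self.alert_ratio = alert_ratio

    def record(self, score):
        """Add one match score; return True if an alert was raised."""
        self.scores.append(score)
        ratio = sum(s < self.low_score for s in self.scores) / len(self.scores)
        logger.debug("match score=%.3f low-confidence ratio=%.2f", score, ratio)
        # Only alert once the window is full, to avoid noise at start-up.
        if len(self.scores) == self.scores.maxlen and ratio > self.alert_ratio:
            logger.warning("low-confidence ratio %.2f exceeds %.2f", ratio, self.alert_ratio)
            return True
        return False
```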

Facial Recognition Technology Standards

Facial recognition technology standards are crucial in ensuring interoperability, reliability, and ethical use of facial recognition systems. Several organizations are developing standards related to facial recognition technology. Here are some notable ones:

- ISO/IEC JTC 1/SC 37.

- National Institute of Standards and Technology (NIST).

- European Committee for Standardization (CEN).

- International Biometric Performance Testing Conferences (IBPC).

- Ethical Guidelines and Regulations:

In addition to technical standards, various organizations and governments have developed ethical guidelines and regulations for the use of facial recognition technology. For example, the European Union's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) impose restrictions on collecting, storing, and processing biometric data, including facial images.

By following this facial recognition software workflow, developers can build and deploy robust face recognition software that meets the needs of various applications, including security, surveillance, biometrics, and human-computer interaction.

About the Author

Purushothama Reddy Bandla is a software architect/developer who pursued his master's degree in VLSI Design. He has over 15 years of experience in the embedded and automotive industries. He is interested in building new end-to-end AI solutions that advance human well-being, and in developing autonomous models for human-friendly interactive machines in the automotive and agriculture fields.
